External modules

Customers who are not yet using our private module registry may want to pull modules from the various external sources supported by Terraform. This article discusses a few of the most popular types of module sources and how to use them in Spacelift.

Cloud storage

The easiest sources to handle are cloud storage buckets: Amazon S3 and Google Cloud Storage (GCS). You can grant access to them using our AWS and GCP integrations, and if you're using private Spacelift workers hosted on either of these clouds, you may not need any authentication at all!

Git repositories

Git is by far the most popular external module source. The examples below use GitHub, but the same advice applies to other VCS providers. Terraform retrieves Git-based modules over one of two supported transports: HTTPS or SSH. If your repository is private, you will need to give Spacelift the credentials required to access it.

Using HTTPS

Git over HTTPS is slightly simpler than SSH: all you need is a personal access token, and you need to make sure it ends up in the ~/.netrc file, which Git (invoked by Terraform) reads to authenticate against the host that stores your source code.

Assuming you already have a token you can use, create a file like this:

```
machine github.com
  login <your GitHub username>
  password <your personal access token>
```

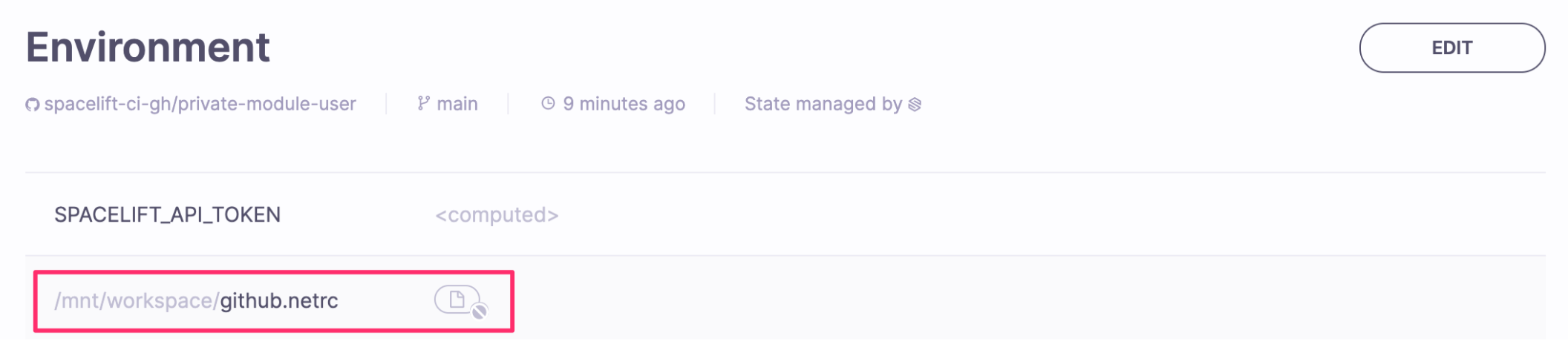

Then, upload this file to your stack's Spacelift environment as a mounted file. In this example, we called that file github.netrc:

Add the following commands as "before init" hooks to append the content of this file to the actual ~/.netrc file:

```shell
cat /mnt/workspace/github.netrc >> ~/.netrc
chmod 600 ~/.netrc
```

Using SSH

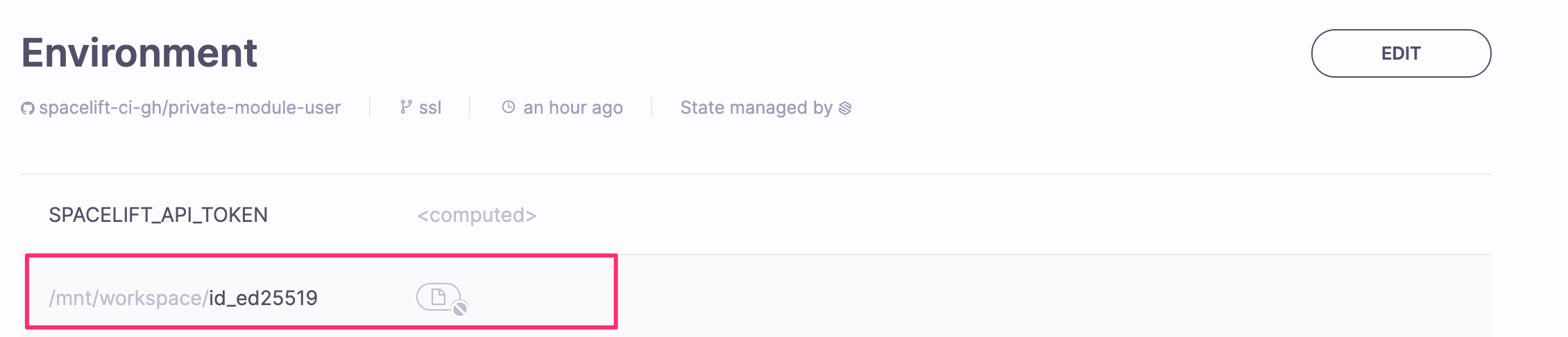

Using SSH isn't much more complex, but it requires a bit more preparation. Once you have a public-private key pair (whether it's a personal SSH key or a single-repo deploy key), you will need to pass it to Spacelift and make sure it's used to access your VCS provider. Once again, we're going to use the mounted file functionality to pass the private key called id_ed25519 to your stack's environment:

Warning

The file must be named exactly id_ed25519; otherwise, your runs will fail with a Permission denied error.

Add the following commands as "before init" hooks to "teach" the SSH client to use this key for GitHub:

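```shell
mkdir -p ~/.ssh
cp /mnt/workspace/id_ed25519 ~/.ssh/id_ed25519
chmod 400 ~/.ssh/id_ed25519
ssh-keyscan github.com >> ~/.ssh/known_hosts
```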

The above example warrants a little explanation. First, we make sure that the ~/.ssh directory exists; otherwise, we couldn't put anything in it. Then we copy the private key file mounted in our workspace to the SSH configuration directory and restrict its permissions so that only its owner can read it. Last but not least, we use the ssh-keyscan utility to retrieve the public SSH host key for github.com and add it to the list of known hosts. This prevents the module checkout from failing on what would otherwise be an interactive prompt asking whether to trust that key.
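If module checkouts still fail, a quick sanity check can narrow down which step went wrong. The sketch below is a hypothetical helper (`check_ssh_setup` is our name for it, not a Spacelift feature). It assumes the key was copied to ~/.ssh/id_ed25519 as described above and that the runner image ships GNU or BusyBox `stat`. It verifies that the key exists, that no group or other permission bits are set on it, and that known_hosts contains an entry for the host.

```shell
# Hypothetical helper, not a Spacelift feature: sanity-checks the SSH setup
# before Terraform tries to clone any modules.
# Assumes GNU or BusyBox `stat`, which support `-c %a`.
check_ssh_setup() {
  ssh_dir="${1:-$HOME/.ssh}"   # directory holding the key and known_hosts
  host="${2:-github.com}"      # VCS host whose key should be trusted
  key="$ssh_dir/id_ed25519"

  if [ ! -f "$key" ]; then
    echo "missing private key: $key" >&2
    return 1
  fi

  # OpenSSH refuses private keys with any group or other permission bits set,
  # so the octal mode must end in "00" (e.g. 400 or 600).
  perms=$(stat -c %a "$key")
  case "$perms" in
    ?00) ;;
    *) echo "key permissions too open: $perms" >&2; return 1 ;;
  esac

  # Unhashed known_hosts lines (the ssh-keyscan default) start with the host name.
  if ! grep -q "^$host[ ,]" "$ssh_dir/known_hosts" 2>/dev/null; then
    echo "no known_hosts entry for $host" >&2
    return 1
  fi

  echo "SSH setup looks OK for $host"
}
```

You could keep this in your repository, source it, and call `check_ssh_setup` as an extra "before init" hook after the SSH hooks; the script location is entirely up to you.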

Tip

If you get an error mentioning error in libcrypto, add a newline to the end of the mounted file and try again.
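The fix from this tip can also be automated. Below is a minimal sketch, assuming a POSIX shell with `tail -c`; `ensure_trailing_newline` is a hypothetical helper name. It appends a newline only when the file's last byte is not already one, so running it repeatedly is harmless.

```shell
# Hypothetical helper: append a newline to a file only if it doesn't already
# end with one.
ensure_trailing_newline() {
  file="$1"
  # `tail -c 1` prints the last byte; command substitution strips a trailing
  # newline, so the result is empty when the file already ends with "\n".
  # `-s` skips missing or empty files.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
}
```

If you use it in a hook, run it on the copied key before tightening its permissions (for example `ensure_trailing_newline ~/.ssh/id_ed25519` right after the copy), since a non-root user can't append to a read-only file.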

Dedicated third-party registries

If you store your modules in a dedicated external private registry, such as Terraform Cloud's, you will need to supply credentials in the .terraformrc file, as described in the official Terraform documentation.

In order to facilitate that, we've introduced a special mechanism for extending the CLI configuration that does not even require using before_init hooks. You can read more about it here.
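For reference, registry credentials in the Terraform CLI configuration take the form of a `credentials` block keyed by the registry host name. The sketch below only illustrates that format, for example in setups where the mechanism above isn't an option. `add_registry_credentials` is a hypothetical helper, and it assumes the configuration lives in ~/.terraformrc unless `TF_CLI_CONFIG_FILE` points elsewhere.

```shell
# Hypothetical sketch: append a Terraform CLI `credentials` block for one
# registry host. Illustrates the file format only.
add_registry_credentials() {
  host="$1"                                          # e.g. app.terraform.io
  token="$2"                                         # API token for that registry
  rc="${3:-${TF_CLI_CONFIG_FILE:-$HOME/.terraformrc}}"

  # Create the file with owner-only permissions before writing a secret to it.
  ( umask 077 && touch "$rc" )
  cat >> "$rc" <<EOF
credentials "$host" {
  token = "$token"
}
EOF
}
```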

To mount or not to mount?

That is the question, and there isn't a single right answer. Instead, there is a list of questions to consider. By mounting a file, you're giving us access to its content. No, we're not going to read it, and yes, we encrypt it using a fancy multi-layered mechanism, but still: we have it. So the main question is how sensitive the credentials are. Read-only deploy keys are probably the least sensitive, since they only grant read access to a single repository; these are the ones where convenience may outweigh other concerns. Personal access tokens, on the other hand, can be quite powerful, even when you generate them for service users. The same goes for personal SSH keys. Guard these well.

So if you don't want to mount these credentials, what are your options? First, you can put them directly into your private runner image. But then anyone in your organization who uses that image gets access to your credentials, which may or may not be what you want.

The other option is to store the credentials externally in a secrets store, such as AWS Secrets Manager or HashiCorp Vault, and retrieve them in one of your before_init hooks before putting them in the right place (the ~/.netrc file, the ~/.ssh directory, etc.).
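Here is a minimal sketch of that approach for the HTTPS case. `add_netrc_entry` is a hypothetical helper that reads a token from standard input, so the output of any secrets CLI can be piped into it. The secret name `github-token`, the Vault path `secret/github`, and the login `my-bot-user` in the comments are placeholders, not values Spacelift expects.

```shell
# Hypothetical "before init" helper: reads a token from stdin and appends a
# ~/.netrc entry for the given host, so the token never has to be mounted.
add_netrc_entry() {
  host="$1"                     # e.g. github.com
  login="$2"                    # account the token belongs to
  netrc="${3:-$HOME/.netrc}"

  token=""
  IFS= read -r token || true    # also accepts input without a final newline
  if [ -z "$token" ]; then
    echo "no token received on stdin" >&2
    return 1
  fi

  ( umask 077 && touch "$netrc" )   # keep the file private (mode 600 if new)
  printf 'machine %s\nlogin %s\npassword %s\n' "$host" "$login" "$token" >> "$netrc"
}

# Example usage in a hook (placeholder secret names):
#   aws secretsmanager get-secret-value --secret-id github-token \
#     --query SecretString --output text | add_netrc_entry github.com my-bot-user
#   vault kv get -field=token secret/github | add_netrc_entry github.com my-bot-user
```

Reading the token from stdin keeps it out of the process argument list and out of the hook definition itself.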

Info

If you decide to mount, we advise storing credentials in contexts and attaching these to the stacks that need them. This helps you avoid credential sprawl and leaks.