VCS agent pools

Info

This feature is only available on the Enterprise plan. Please check out our pricing page for more information.

By default, Spacelift communicates with your VCS provider directly. However, some users may have special requirements regarding infrastructure, security, or compliance, and need to host their VCS system in a way that's only accessible internally where Spacelift can't reach it. This is where VCS agent pools come into play.

A single VCS agent pool is a way for Spacelift to communicate with a single VCS system on your side. You run VCS agents inside of your infrastructure and configure them with your internal VCS system endpoint. They will then connect to a gateway on our backend, and we will be able to access your VCS system through them.

Raw git

Raw git does not work with VCS agents. If you are using raw git repositories, you will need to use publicly accessible URLs or implement a workaround like a proxy or tunnel to replace VCS agent functionality.

The VCS agent applies very conservative checks to decide which requests are let through and which are denied, using an explicit allowlist of the paths that Spacelift needs in order to work. All requests are logged to standard output with a description of what they were used for.

Create the VCS agent pool

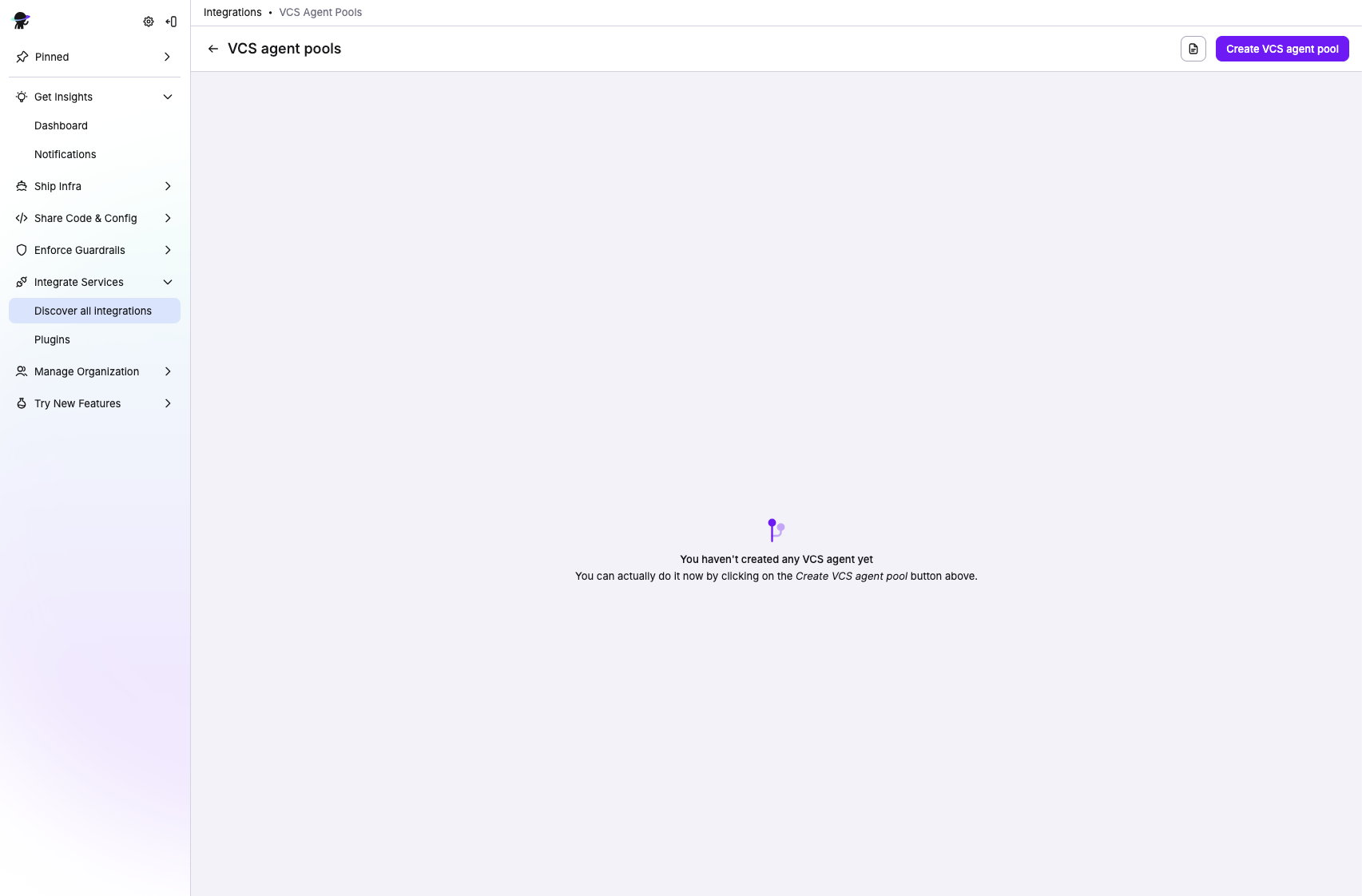

- Navigate to Integrate Services > Integrations.

- On the VCS Agent Pools card, click View.

- In the top-right corner, click Create VCS agent pool.

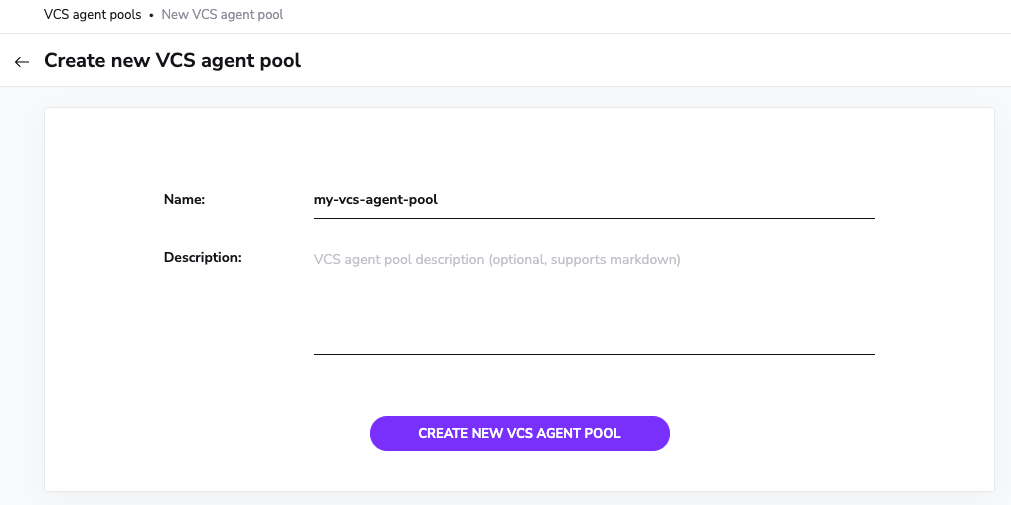

- Fill in the pool details:

  - Name: Enter a unique, descriptive name for your VCS agent pool.

  - Description (optional): Enter a (markdown-supported) description of the agent pool.

- Click Create new VCS agent pool.

A configuration token will be downloaded.

Running the VCS agent

Download the VCS agent binaries

The latest versions of the VCS agent binaries for Linux are available at Spacelift's CDN:

Binaries for other operating systems are available on the GitHub Releases page.

Checksum verification
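As a rough illustration of what a checksum check involves, the sketch below compares a downloaded file's digest with a published value. It assumes the release publishes SHA-256 digests, which this page does not confirm; the function names are hypothetical, and the release page remains the authority on the actual checksum format and any signature verification steps.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_checksum(path: Path, expected_hex: str) -> bool:
    """Compare the computed digest with the published one, ignoring case and whitespace."""
    # Assumption: the published checksum is a SHA-256 hex string.
    return hmac.compare_digest(sha256_of(path), expected_hex.strip().lower())
```

A caller would pass the path of the downloaded binary and the digest copied from the published checksum file, and refuse to run the binary if `verify_checksum` returns `False`.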


Run via Docker

The VCS Agent is also available as a multi-arch (amd64 and arm64) Docker image:

- public.ecr.aws/spacelift/vcs-agent:latest

- public.ecr.aws/spacelift/vcs-agent:<version>

The available versions are listed on the GitHub Releases page.
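One way to launch the container is to forward the three required settings from the calling environment. The sketch below builds such a `docker run` argument list; the helper name is hypothetical, and the `--rm` flag is an assumption about how you might want to run it rather than a documented requirement.

```python
from collections.abc import Mapping

IMAGE = "public.ecr.aws/spacelift/vcs-agent:latest"

# The three settings the agent needs: pool token, VCS endpoint, and vendor.
REQUIRED_VARS = (
    "SPACELIFT_VCS_AGENT_POOL_TOKEN",
    "SPACELIFT_VCS_AGENT_TARGET_BASE_ENDPOINT",
    "SPACELIFT_VCS_AGENT_VENDOR",
)


def docker_run_args(env: Mapping[str, str], image: str = IMAGE) -> list[str]:
    """Build a `docker run` argument list that forwards the required variables."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise ValueError(f"missing required variables: {', '.join(missing)}")
    args = ["docker", "run", "--rm"]
    for name in REQUIRED_VARS:
        # `-e NAME` without a value makes Docker copy NAME from the caller's
        # environment, so the token does not appear on the command line.
        args += ["-e", name]
    return args + [image]
```

The result can be passed to `subprocess.run(docker_run_args(os.environ))` once the three variables are exported in the current shell.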


Run the VCS Agent inside a Kubernetes Cluster

We have a VCS Agent Helm Chart that you can use to install the VCS agent on top of your Kubernetes Cluster. After creating a VCS agent pool in Spacelift and generating a token, you can add our Helm chart repo and update your local cache using:


Assuming your token, VCS endpoint, and vendor are stored in the SPACELIFT_VCS_AGENT_POOL_TOKEN, SPACELIFT_VCS_AGENT_TARGET_BASE_ENDPOINT, and SPACELIFT_VCS_AGENT_VENDOR environment variables, you can install the chart using the following command:


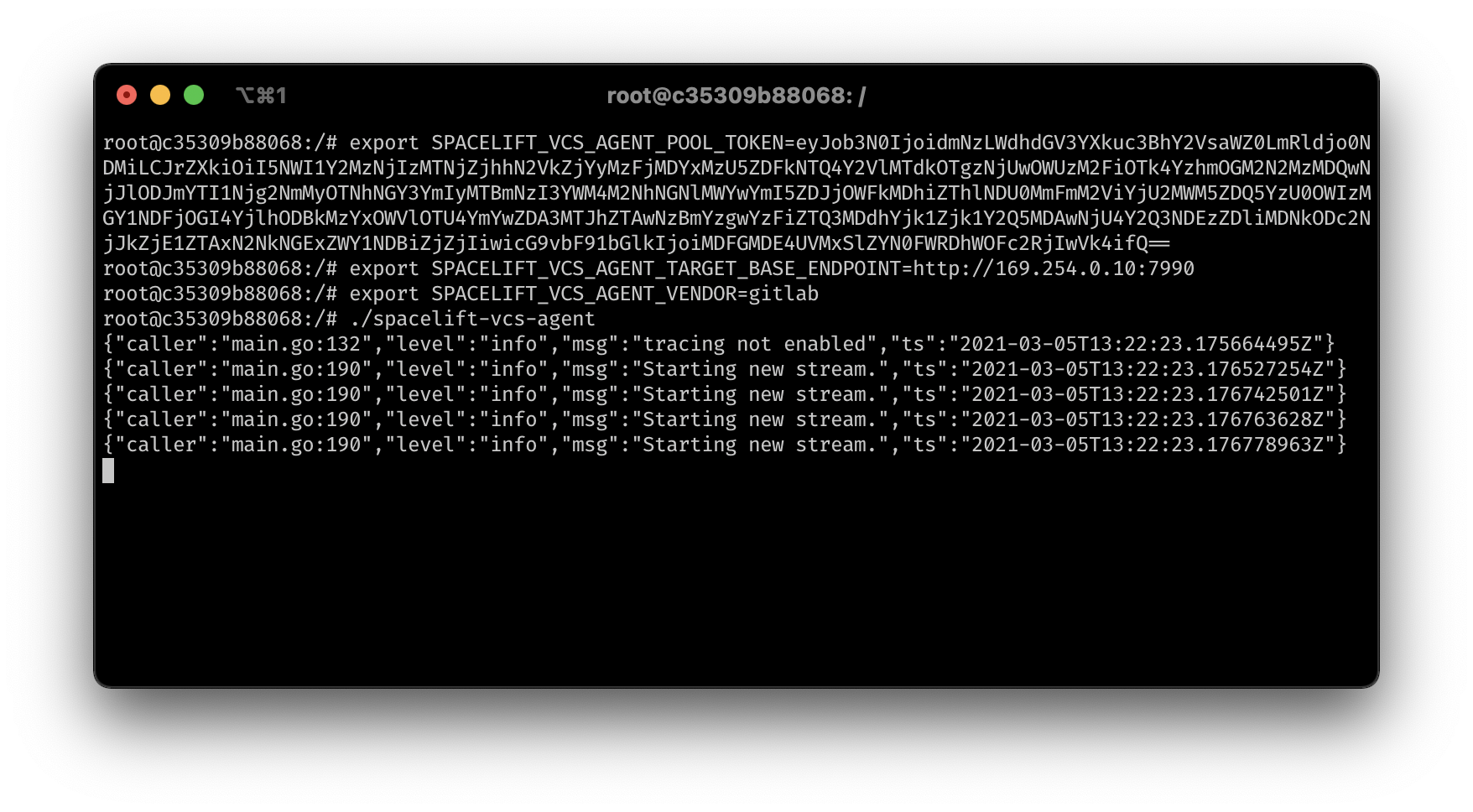

Configure and run the VCS agent

A number of configuration variables are available to customize how your VCS Agent behaves.

| CLI Flag | Environment Variable | Status | Default Value | Description |
|---|---|---|---|---|
| --target-base-endpoint | SPACELIFT_VCS_AGENT_TARGET_BASE_ENDPOINT | Required | | The internal endpoint of your VCS system, including the protocol, as well as port, if applicable. (e.g., http://169.254.0.10:7990) |
| --token | SPACELIFT_VCS_AGENT_POOL_TOKEN | Required | | The token you’ve received from Spacelift during VCS Agent Pool creation |
| --vendor | SPACELIFT_VCS_AGENT_VENDOR | Required | | The vendor of your VCS system. Currently available options are azure_devops, gitlab, bitbucket_datacenter and github_enterprise |
| --allowed-projects | SPACELIFT_VCS_AGENT_ALLOWED_PROJECTS | Optional | .* | Regexp matching allowed projects for API calls. Projects are in the form: 'group/repository'. |
| --bugsnag-api-key | SPACELIFT_VCS_AGENT_BUGSNAG_API_KEY | Optional | | Override the Bugsnag API key used for error reporting. |
| --parallelism | SPACELIFT_VCS_AGENT_PARALLELISM | Optional | 4 | Number of streams to create. Each stream can handle one request simultaneously. |
| --debug-print-all | SPACELIFT_VCS_AGENT_DEBUG_PRINT_ALL | Optional | false | Makes vcs-agent print out all the requests and responses. |
| | HTTPS_PROXY | Optional | | Hostname or IP address of the proxy server, including the protocol, as well as port, if applicable. (e.g., http://10.10.1.1:8888) |
| | NO_PROXY | Optional | | Comma-separated list of host names that shouldn't go through any proxy. |
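Before deploying an --allowed-projects pattern, it can help to try it against a few project paths. The sketch below does this with Python's `re` module under two assumptions: that the whole 'group/repository' path must match, and that the pattern behaves the same in Python as in the agent's own regexp engine. Neither is confirmed here, and the group and repository names are hypothetical.

```python
import re


def is_allowed(project: str, pattern: str = ".*") -> bool:
    """Return True if the 'group/repository' path matches the allowlist pattern."""
    # Assumption: the whole path must match; the agent's real rules may differ.
    return re.fullmatch(pattern, project) is not None


# Hypothetical groups and repositories, for illustration only.
pattern = r"platform/.*|shared/terraform-modules"

print(is_allowed("platform/network", pattern))      # True
print(is_allowed("sandbox/experiments", pattern))   # False
print(is_allowed("sandbox/experiments"))            # True: the default .* allows everything
```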

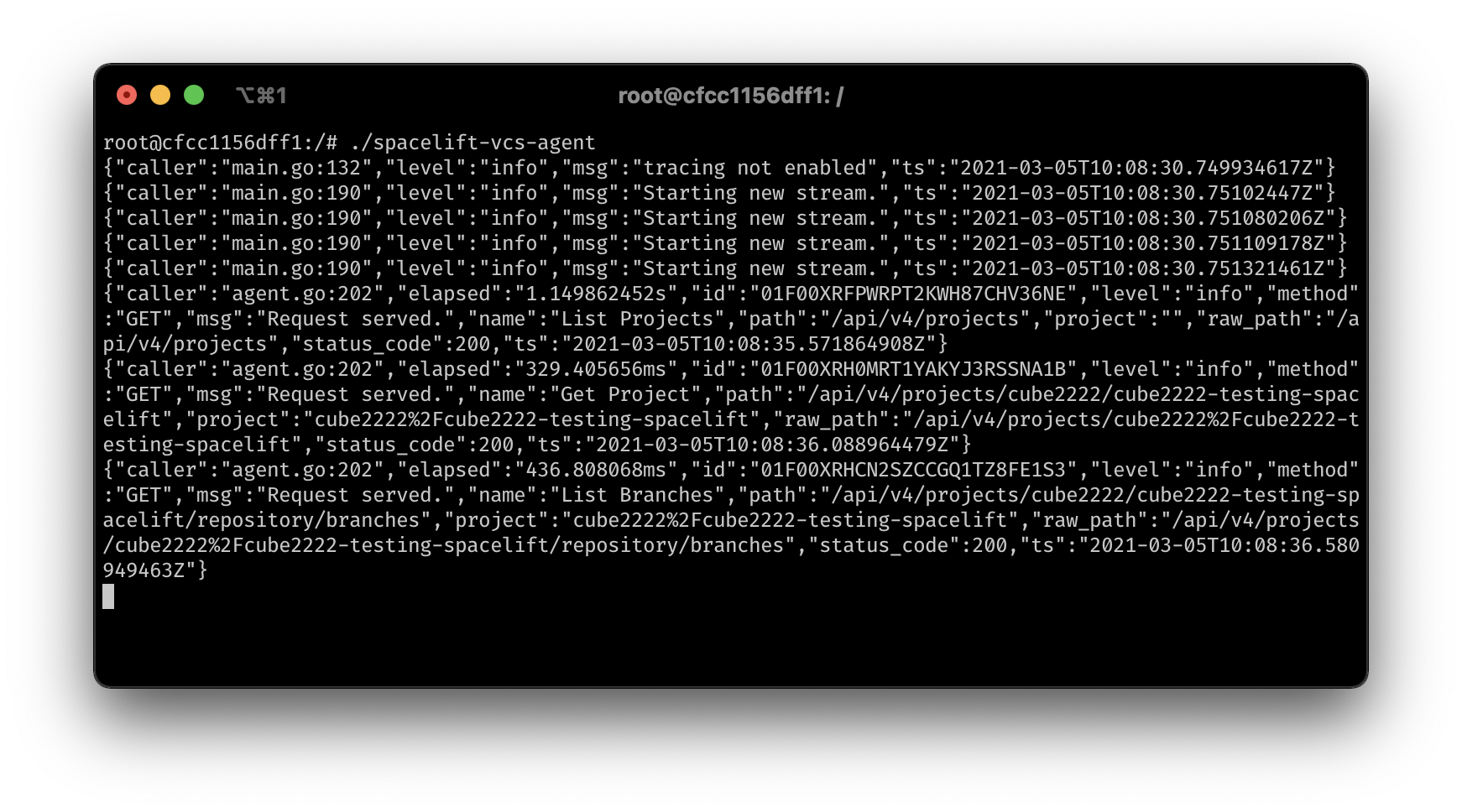

Once all required configuration is complete, your VCS agent should connect to the Spacelift backend and start handling connections.

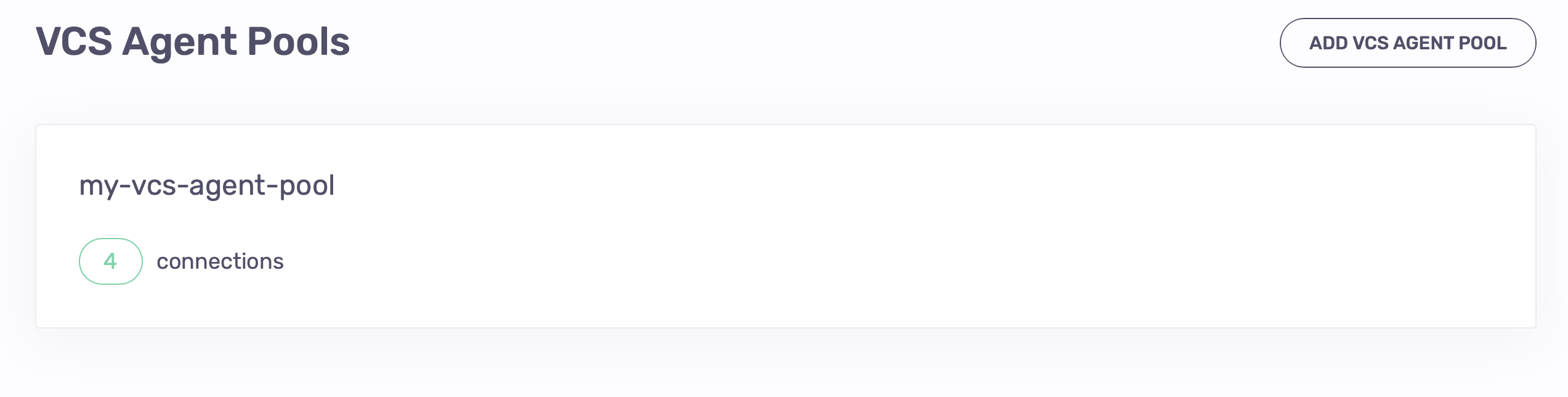

The VCS Agent Pools page displays the number of active connections used by your pool.

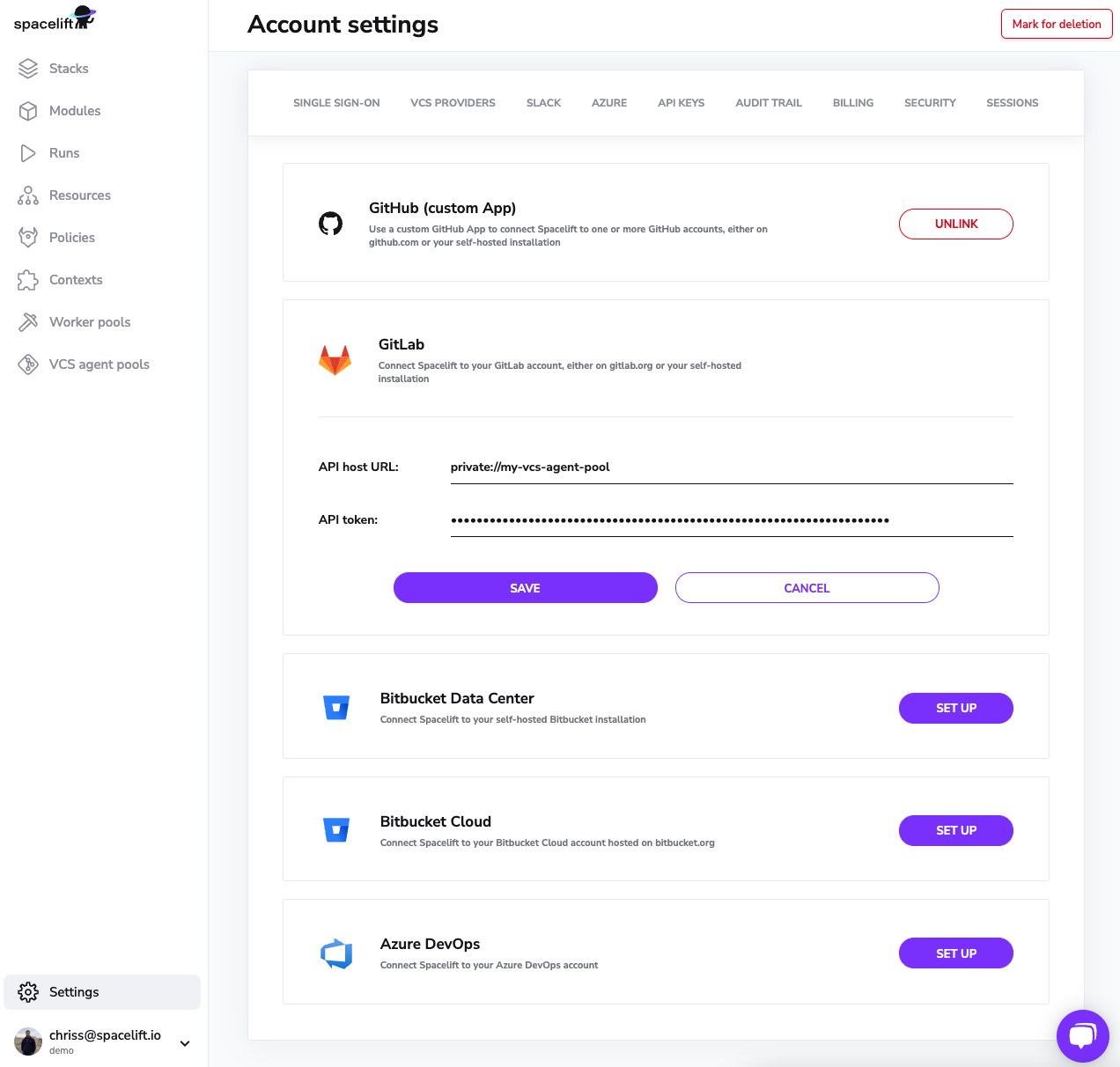

Whenever you need to specify an endpoint inside of Spacelift that should use your VCS agent pool, you should write it this way: private://<vcs-agent-pool-name>/possible/path
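The format can be captured in a small helper. This is only a sketch of the string layout described above; the function name is hypothetical, and treating the path as optional is an assumption based on the word "possible" in the template.

```python
def private_endpoint(pool_name: str, path: str = "") -> str:
    """Build a private:// endpoint for the given VCS agent pool name."""
    if not pool_name:
        raise ValueError("pool name must not be empty")
    suffix = path.strip("/")
    # Assumption: the path component is optional.
    return f"private://{pool_name}/{suffix}" if suffix else f"private://{pool_name}"


print(private_endpoint("my-pool", "/possible/path"))  # private://my-pool/possible/path
```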

When trying to use this integration, such as by opening the stack creation form, you'll get a detailed log of the requests:

Configure direct network access

Tip

VCS agents are intended for version control systems (VCS) that cannot be accessed over the internet from the Spacelift backend.

If your VCS can be accessed over the internet, possibly after allowing the Spacelift backend IP addresses, then you do not need to use VCS agents.

When using private workers with a privately accessible version control system, your private workers need direct network access to your VCS.

Additionally, you need to inform the private workers of the target network address for each of your VCS agent pools by setting up the following variables:

- SPACELIFT_PRIVATEVCS_MAPPING_NAME_<NUMBER>: Name of the VCS agent pool.

- SPACELIFT_PRIVATEVCS_MAPPING_BASE_ENDPOINT_<NUMBER>: IP address or hostname, with protocol, for the VCS system.

There can be multiple VCS systems, so replace <NUMBER> with an integer. Start from 0 and increment it by one for each new VCS system.

Here is an example that configures access to two VCS systems:
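As a sketch of the numbering scheme, the snippet below generates the variable assignments for two VCS systems. The pool names and internal addresses are hypothetical placeholders, and the helper is illustrative rather than part of any Spacelift tooling.

```python
def private_vcs_mapping(pools: list[tuple[str, str]]) -> dict[str, str]:
    """Return the worker environment variables for (pool name, base endpoint) pairs."""
    env: dict[str, str] = {}
    for number, (name, endpoint) in enumerate(pools):  # numbering starts at 0
        env[f"SPACELIFT_PRIVATEVCS_MAPPING_NAME_{number}"] = name
        env[f"SPACELIFT_PRIVATEVCS_MAPPING_BASE_ENDPOINT_{number}"] = endpoint
    return env


# Hypothetical pool names and internal addresses, for illustration only.
mapping = private_vcs_mapping([
    ("bitbucket-pool", "http://192.168.2.2"),
    ("github-pool", "https://github.internal.example"),
])

# Print shell-style assignments that could be set on the private workers.
for key, value in mapping.items():
    print(f"export {key}={value}")
```

Each pool contributes one NAME and one BASE_ENDPOINT variable sharing the same number, so the worker can pair them up.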


See the VCS Agents section in the Kubernetes workers docs for information on how to configure VCS agents with Kubernetes-based workers.

Worker pool settings

VCS agents are only supported when using private worker pools.

Since your source code is downloaded directly by Spacelift workers, you need to configure them to directly access your VCS instance.

Passing metadata tags

When a VCS agent connects to the gateway, it can send tags that let you uniquely identify the process or machine for debugging purposes. Any environment variable that uses the SPACELIFT_METADATA_ prefix is passed on.

For example, if you're running Spacelift VCS Agents in EC2, you can do the following just before you execute the VCS Agent binary:


This will set your EC2 instance ID as the instance_id tag in your VCS agent connections.
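To make the naming convention concrete, the sketch below shows how prefixed variables could map to tag names. It illustrates the convention only and is not the agent's actual implementation; the function name and the instance ID are hypothetical.

```python
import os
from collections.abc import Mapping
from typing import Optional

PREFIX = "SPACELIFT_METADATA_"


def metadata_tags(env: Optional[Mapping[str, str]] = None) -> dict[str, str]:
    """Collect variables carrying the metadata prefix, keyed by tag name."""
    env = os.environ if env is None else env
    return {
        key[len(PREFIX):]: value
        for key, value in env.items()
        # Skip a bare prefix, which would produce an empty tag name.
        if key.startswith(PREFIX) and len(key) > len(PREFIX)
    }


# Hypothetical instance ID, for illustration only.
example = {"SPACELIFT_METADATA_instance_id": "i-0123456789abcdef0", "PATH": "/usr/bin"}
print(metadata_tags(example))  # {'instance_id': 'i-0123456789abcdef0'}
```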

Debug information

Sometimes, it is helpful to display additional information to troubleshoot an issue. When that is needed, set the following environment variables:


You may want to tweak the values to increase or decrease verbosity.