Stack settings

Many settings can be configured directly on a stack to influence how its runs and tasks are processed. Other factors that influence runs and tasks include:

- Environment variables

- Attached contexts

- Runtime configuration

- Integrations


Common settings

You can configure these settings when you create a stack or at any time afterward. To edit an existing stack's settings:

- Navigate to the Stacks page in Spacelift.

- Click the three dots beside the name of the stack you want to configure.

- Click Settings, then click Behavior.

- Make your adjustments and click Save.

Administrative

Deprecated - Use stack role attachments instead

The administrative flag is deprecated and will be automatically disabled on June 1st, 2026. On that date, Spacelift will automatically attach the Space Admin role to each administrative stack, using the stack's own space as the binding space. This is fully backward compatible but does not provide the advanced features of stack role attachments.

We recommend migrating as soon as possible to stack role attachments to take advantage of:

- Cross-space access: Access sibling spaces, not just your own space and subspaces

- Fine-grained permissions: Use custom roles with specific actions instead of full Space Admin permissions

- Enhanced audit trail: Role information included in webhook payloads

To replicate the current behavior, attach the Space Admin role to the stack using its own space as the binding space. See the migration guide for detailed instructions.

This legacy setting determines whether a stack has administrative privileges within its space. Administrative stacks receive an API token that grants them elevated access to a subset of the Spacelift API, which is used by the Terraform provider. This allows them to create, update, and destroy Spacelift resources.

Info

Administrative stacks get the Admin role in the space they belong to and all of its subspaces.

Administrative stacks can declaratively manage other stacks, their environments, contexts, policies, modules, and worker pools. This approach helps avoid manual configuration, often referred to as "ClickOps."

You can also export stack outputs as a context to avoid exposing the entire state through Terraform's remote state or external storage mechanisms like AWS Parameter Store or Secrets Manager.

Autodeploy

When Autodeploy is enabled (true), any change to the tracked branch will be automatically applied if the planning phase is successful and there are no plan policy warnings.

You might consider enabling Autodeploy if you always perform an automated code review before merging to the tracked branch or if you rely on plan policies to flag potential issues. If every change undergoes a meaningful human review by stack writers, requiring an additional step to confirm deployment may be unnecessary.

When Autodeploy is enabled, approval policies are only evaluated during the queued stage, not in the unconfirmed state.
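The autodeploy condition described above can be written as a small predicate. This is a minimal Python sketch, not Spacelift code: the Run fields and the should_autodeploy function are hypothetical names chosen for illustration, and the sketch leaves out approval policies.

```python
from dataclasses import dataclass


@dataclass
class Run:
    # Hypothetical summary of a run; these fields are not Spacelift's API.
    on_tracked_branch: bool
    plan_succeeded: bool
    plan_policy_warnings: int


def should_autodeploy(autodeploy_enabled: bool, run: Run) -> bool:
    """Return True if the run would be applied without manual confirmation."""
    return (
        autodeploy_enabled
        and run.on_tracked_branch
        and run.plan_succeeded
        and run.plan_policy_warnings == 0
    )


print(should_autodeploy(True, Run(True, True, 0)))  # True
print(should_autodeploy(True, Run(True, True, 1)))  # False: a plan policy warning
```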

Autoretry

This setting determines whether obsolete proposed changes are retried automatically. When Autoretry is enabled (true), any Pull Requests to the tracked branch that conflict with an applied change will be reevaluated based on the updated state.

This feature saves you from manually retrying runs on Pull Requests when the state changes. It also provides greater confidence that the proposed changes will match the actual changes after merging the Pull Request.

Autoretry is only supported for stacks with a private worker pool attached.

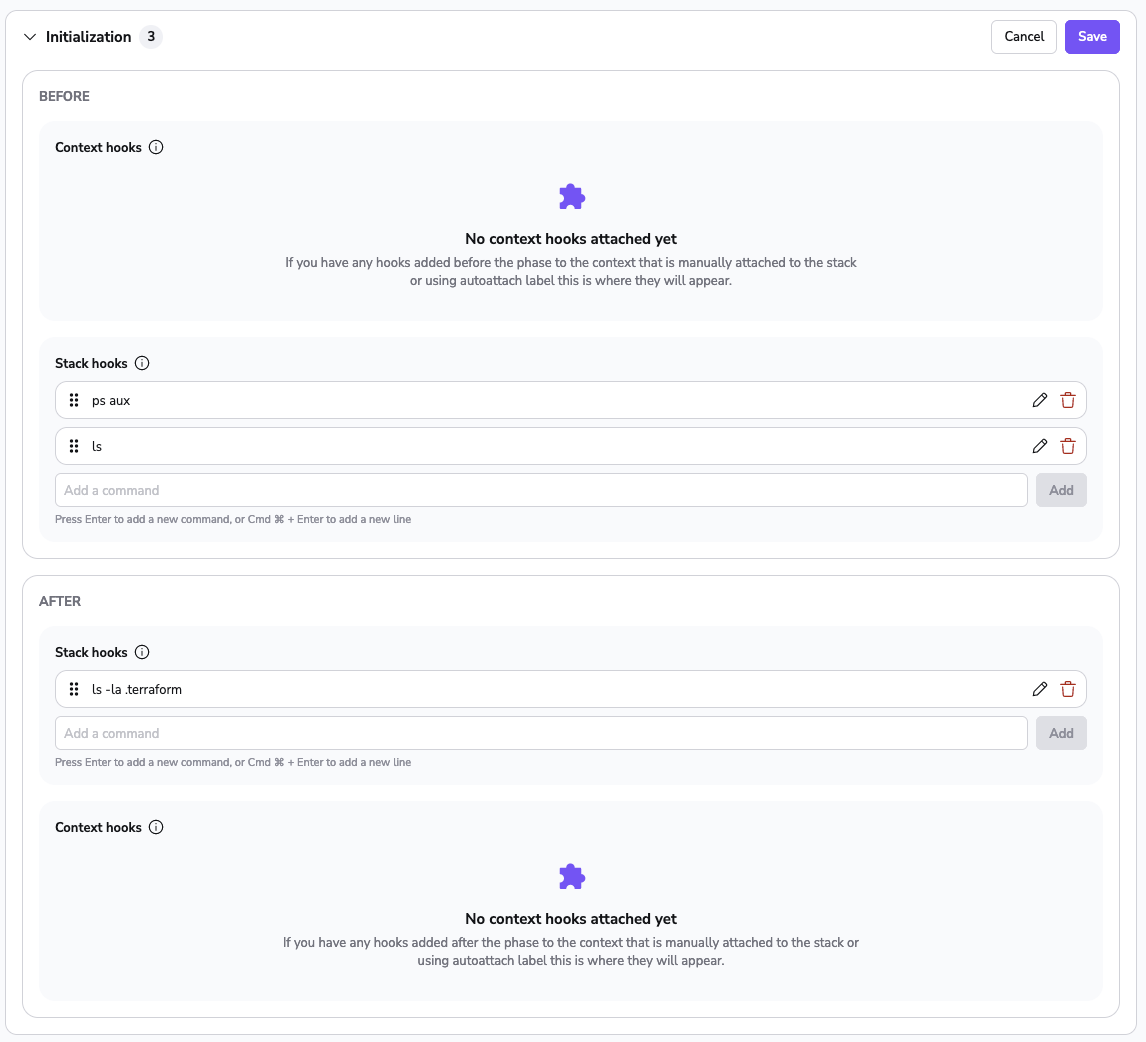

Customizing workflow

Spacelift workflows can be customized by adding extra commands to be executed before and after specific phases:

- Initialization (before_init and after_init)

- Planning (before_plan and after_plan)

- Applying (before_apply and after_apply)

- Destroying (before_destroy and after_destroy): used during module test cases, and by stacks during destruction with the corresponding stack_destructor_resource

- Performing (before_perform and after_perform)

- Finally (after_run): Executed after each actively processed run, regardless of its outcome. These hooks have access to an environment variable called TF_VAR_spacelift_final_run_state, which indicates the final state of the run.

All hooks, including after_run, execute on the worker. If the run is terminated outside the worker (e.g., canceled or discarded), or if there is an issue setting up the workspace or starting the worker container, the hooks will not fire.

Info

If a "before" hook fails (non-zero exit code), the corresponding phase will not execute. Similarly, if a phase fails, none of the "after" hooks will execute unless the hook uses a semicolon (;).

These commands can serve two main purposes: modifying the workspace (such as setting up symlinks or moving files) or running validations using tools like tfsec, tflint, or terraform fmt.

How to run multiple commands

Avoid using newlines (\n) in hooks. Spacelift chains commands with double ampersands (&&) and wraps each hook in curly braces ({}) to avoid ambiguous behavior. Using newlines can hide non-zero exit codes if the last command in the block succeeds. To run multiple commands, either add multiple hooks or mount a script as a file and call it from the hook.

Additionally, using a semicolon (;) in hooks will cause subsequent commands to run even if the phase fails. Use && or wrap your hook in parentheses to ensure "after" commands only execute if the phase succeeds.
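The difference between newlines, &&, and ; is ordinary POSIX shell behavior. The Python sketch below runs a few one-liners through sh (assumed to be on the PATH) to show it. The run_sh helper is hypothetical, and the last case only assumes that a hook is wrapped in braces and chained with && as described above; it is not Spacelift's exact generated script.

```python
import subprocess


def run_sh(script: str) -> subprocess.CompletedProcess:
    """Hypothetical helper: run `script` with POSIX sh and capture its output."""
    return subprocess.run(["sh", "-c", script], capture_output=True, text=True)


# Newline-separated commands: the exit code is that of the last command,
# so the failing `false` is hidden.
newline = run_sh("false\ntrue")

# && stops at the first failure and keeps its non-zero exit code.
chained = run_sh("false && true")

# ; runs the next command no matter how the previous one ended.
semicolon = run_sh("false; echo after")

# && skips the follow-up command when the preceding one fails.
guarded = run_sh("false && echo after")

# A hook containing a newline, wrapped in braces and chained with &&:
# the failure inside the hook is hidden, so the next link still runs.
grouped = run_sh("{\nfalse\ntrue\n} && echo next")

for name, result in [("newline", newline), ("chained", chained),
                     ("semicolon", semicolon), ("guarded", guarded),
                     ("grouped", grouped)]:
    print(f"{name}: exit={result.returncode} stdout={result.stdout.strip()!r}")
```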

Warning

When a run resumes after being paused (e.g., for confirmation or approval), the remaining phases run in a new container. Any tools installed in earlier phases will not be available. To avoid this, bake the tools into a custom runner image.

The workflow can be customized using either the Terraform provider or the GUI. The GUI provides an editor that lets you add, remove, and reorder commands using drag and drop. Commands preceding a phase are "before" hooks, while those following it are "after" hooks.

Commands run in the same shell session as the phase itself, so the phase will have access to any shell variables exported by preceding scripts. Environment variables are preserved across phases.

Info

These scripts can be overridden by the runtime configuration specified in the .spacelift/config.yml file.

Note on hook order

Hooks added directly to a stack and hooks from contexts attached to it are ordered according to different rules:

- Stack hooks are ordered through drag and drop in the editor.

- Hooks from manually attached contexts are ordered by context priority.

- Hooks from auto-attached contexts are ordered alphabetically or in reverse alphabetical order, depending on the hook type (before/after).

Hooks from manually and auto-attached contexts can only be edited from their respective views.

In the before phase, hook priorities work as follows:

- context hooks (based on set priorities)

- context auto-attached hooks (reversed alphabetically)

- stack hooks

In the after phase, hook priorities work as follows:

- stack hooks

- context auto-attached hooks (alphabetically)

- context hooks (reversed priorities)

Let's suppose you have 4 contexts attached to a stack:

- context_a (auto-attached)

- context_b (auto-attached)

- context_c (priority 0)

- context_d (priority 5)

Each of these contexts has hooks that echo the context name before and after a phase. In addition, the stack itself has two static hooks, one before and one after the phase, that simply run echo stack.

Before phase order:

- context_c

- context_d

- context_b

- context_a

- stack

After phase order:

- stack

- context_a

- context_b

- context_d

- context_c
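The ordering rules above can be expressed as a short Python sketch. The Context class and the before_order/after_order functions are hypothetical. An auto-attached context is modeled as one without a priority, lower priority numbers run first in the before phase (matching the example), and all stack hooks are collapsed into a single "stack" entry. Ties in priority are not covered.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Context:
    name: str
    # Hypothetical model: None marks an auto-attached context (no priority).
    priority: Optional[int] = None


def before_order(contexts: List[Context]) -> List[str]:
    """Manual contexts by ascending priority, auto-attached Z-A, then the stack."""
    manual = sorted((c for c in contexts if c.priority is not None),
                    key=lambda c: c.priority)
    auto = sorted((c for c in contexts if c.priority is None),
                  key=lambda c: c.name, reverse=True)
    return [c.name for c in manual] + [c.name for c in auto] + ["stack"]


def after_order(contexts: List[Context]) -> List[str]:
    """The stack, then auto-attached A-Z, then manual contexts by reversed priority."""
    manual = sorted((c for c in contexts if c.priority is not None),
                    key=lambda c: c.priority, reverse=True)
    auto = sorted((c for c in contexts if c.priority is None),
                  key=lambda c: c.name)
    return ["stack"] + [c.name for c in auto] + [c.name for c in manual]


contexts = [
    Context("context_a"),
    Context("context_b"),
    Context("context_c", priority=0),
    Context("context_d", priority=5),
]
print(before_order(contexts))  # ['context_c', 'context_d', 'context_b', 'context_a', 'stack']
print(after_order(contexts))   # ['stack', 'context_a', 'context_b', 'context_d', 'context_c']
```

In this model the after order is the exact mirror of the before order, much like nested setup and teardown steps.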

Runtime commands

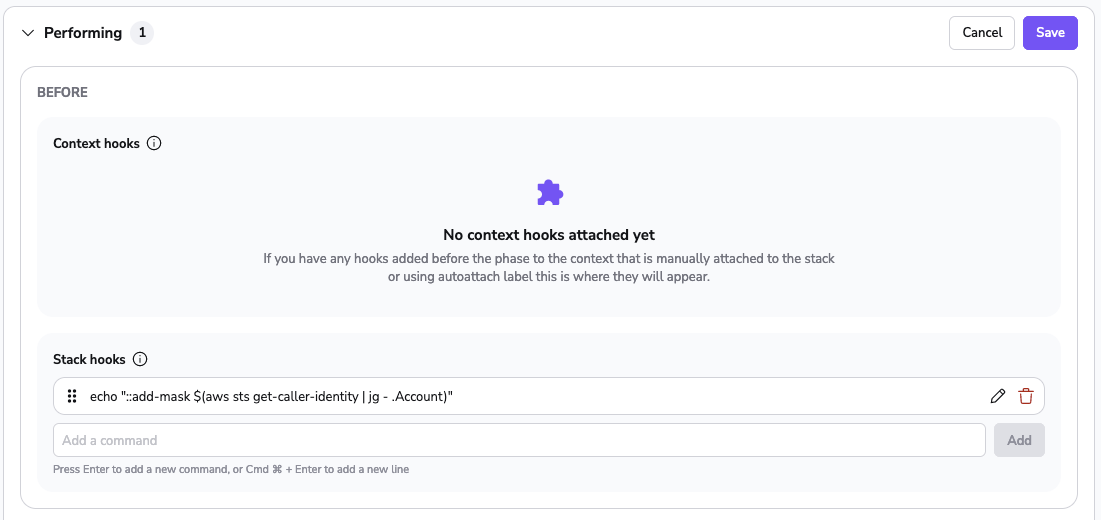

Spacelift can handle special commands to change the workflow behavior. Runtime commands use the echo command in a specific format. You can use those commands in any lifecycle step of the workflow.

The following table lists the supported commands. Each command is described in more detail after the table.

| Command | Description |
|---|---|
| ::add-mask | Adds a set of values that should be masked in log output |

::add-mask

When you mask a value, it is treated as a secret and redacted in the log output. Each whitespace-separated masked word is replaced with five asterisks (*****).
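A minimal Python sketch of this masking rule, assuming masked words are matched as plain substrings anywhere in a log line. add_mask and redact are hypothetical helpers, not part of Spacelift or spacectl.

```python
import re
from typing import Set

MASK = "*****"


def add_mask(masked: Set[str], value: str) -> None:
    """Register each whitespace-separated word of `value` as a masked value."""
    masked.update(value.split())


def redact(line: str, masked: Set[str]) -> str:
    """Replace every occurrence of a masked word in `line` with five asterisks."""
    if not masked:
        return line
    # Longest words first, so a secret that contains another is masked whole.
    pattern = "|".join(re.escape(w) for w in sorted(masked, key=len, reverse=True))
    return re.sub(pattern, MASK, line)


masked: Set[str] = set()
add_mask(masked, "s3cr3t hunter2")
print(redact("password=s3cr3t backup=hunter2", masked))  # password=***** backup=*****
```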


Enable local preview

Indicates whether proposed runs can be created from user-uploaded local workspaces.

If this is enabled, you can use the spacectl stack local-preview command to create a proposed run based on the directory you're in.

Warning

Use this setting with caution, as it allows anyone with write access to the stack to execute arbitrary code with access to all the environment variables configured in the stack.

Enable well known secret masking

This setting determines whether values matching well-known secret patterns are automatically redacted from logs. If enabled, the following secrets will be masked:

- AWS Access Key Id

- Azure AD Client Secret

- Azure Connection Strings (AccountKey=..., SharedAccessSignature=...)

- GitHub PAT

- GitHub Fine-Grained PAT

- GitHub App Token

- GitHub Refresh Token

- GitHub OAuth Access Token

- JWT tokens

- Slack Token

- PGP Private Key

- RSA Private Key

- PEM block with BEGIN PRIVATE KEY header
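To illustrate how pattern-based masking can work, here is a Python sketch covering a few of the listed secret types. The regular expressions are common public formats chosen for illustration (for example, AWS access key IDs starting with AKIA or ASIA, and GitHub tokens with ghp_, gho_, ghu_, ghs_, or ghr_ prefixes). They are assumptions, not Spacelift's actual detection rules, and the ***** replacement simply reuses the ::add-mask convention.

```python
import re

MASK = "*****"

# Illustrative patterns only; these are not Spacelift's detection rules.
PATTERNS = [
    re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),          # AWS access key ID
    re.compile(r"\bgh[pousr]_[A-Za-z0-9]{36}\b"),          # GitHub PAT/OAuth/app/refresh tokens
    re.compile(r"\bgithub_pat_[A-Za-z0-9_]+\b"),           # GitHub fine-grained PAT
    re.compile(r"\bxox[abprs]-[A-Za-z0-9-]+"),             # Slack token
    re.compile(r"\beyJ[A-Za-z0-9_-]*\.[A-Za-z0-9_-]+\.[A-Za-z0-9_-]*"),  # JWT
    re.compile(r"-----BEGIN (?:RSA |PGP )?PRIVATE KEY(?: BLOCK)?-----.*?"
               r"-----END (?:RSA |PGP )?PRIVATE KEY(?: BLOCK)?-----",
               re.DOTALL),                                 # RSA/PGP/PEM private keys
]


def mask_well_known_secrets(text: str) -> str:
    """Replace every match of every pattern with five asterisks."""
    for pattern in PATTERNS:
        text = pattern.sub(MASK, text)
    return text


print(mask_well_known_secrets("key=AKIAABCDEFGHIJKLMNOP"))  # key=*****
```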

Name and description

Stack name and description are pretty self-explanatory. The required name is what you'll see in the stack list on the home screen and in the menu selection dropdown. Make it informative enough to immediately communicate the purpose of the stack, but short enough to fit in the dropdown without cutting off important information.

The optional description is completely free-form and supports Markdown. This is a good place for a thorough explanation of the purpose of the stack and a link or two.

Tip

Based on the original name, Spacelift generates an immutable slug that serves as a unique identifier of this stack. If the name and the slug diverge significantly, things may become confusing.

Even though you can change the stack name at any point, we strongly discourage all non-trivial changes.

Labels

Labels are arbitrary, user-defined tags that can be attached to stacks. A single stack can have any number of labels, but each must be unique. Labels can be used for any purpose, including UI filtering, but they shine most in user-defined policies, which can modify their behavior based on the presence (or absence) of a particular label.

You can also add some "magic" labels to your stacks, which add or remove functionality based on their presence.

List of the most useful labels:

- infracost -- Enables Infracost on your stack.

- feature:enable_log_timestamps -- Enables timestamps on run logs.

- feature:add_plan_pr_comment -- Enables pull request plan commenting. Use notification policies instead.

- feature:disable_pr_comments -- Disables pull request comments.

- feature:disable_pr_delta_comments -- Disables pull request delta comments (the default change summary).

- feature:disable_resource_sanitization -- Disables resource sanitization.

- feature:enable_git_checkout -- Downloads source code using a standard Git checkout rather than downloading a tarball through the API.

- feature:aws_oidc_session_tagging -- Enables AWS session tagging when using OIDC.

- feature:ignore_runtime_config -- Ignores the runtime configuration in .spacelift/config.yml.

- terragrunt -- Legacy way of using Terragrunt through the Terraform backend.

- ghenv: Name -- Sets the GitHub deployment environment (defaults to the stack name).

- ghenv: - -- Disables the creation of GitHub deployment environments.

- autoattach:autoattached_label -- Used on policies, contexts, and integrations to automatically attach them to all stacks labeled autoattached_label.

- feature:k8s_keep_using_prune_white_list_flag -- Sets the --prune-whitelist flag instead of --prune-allowlist for the .PruneWhiteList template parameter in the Kubernetes custom workflow.

- feature:pr_enforce_unique_module_version -- Enforces unique module versions even for PR checks.
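The autoattach: label convention can be sketched in Python as a simple set intersection. The function name and data shapes are hypothetical; the sketch only models the rule stated above, where a policy, context, or integration labeled autoattach:X attaches to every stack that carries the label X.

```python
from typing import Dict, List, Set

AUTOATTACH_PREFIX = "autoattach:"


def autoattached_stacks(resource_labels: Set[str],
                        stacks: Dict[str, Set[str]]) -> List[str]:
    """Hypothetical helper: names of stacks a policy/context/integration auto-attaches to."""
    # Strip the prefix: "autoattach:prod" targets stacks labeled "prod".
    wanted = {label[len(AUTOATTACH_PREFIX):]
              for label in resource_labels
              if label.startswith(AUTOATTACH_PREFIX)}
    return sorted(name for name, labels in stacks.items() if labels & wanted)


stacks = {
    "api": {"prod", "team-a"},
    "web": {"dev"},
    "db": {"prod"},
}
print(autoattached_stacks({"autoattach:prod"}, stacks))  # ['api', 'db']
```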

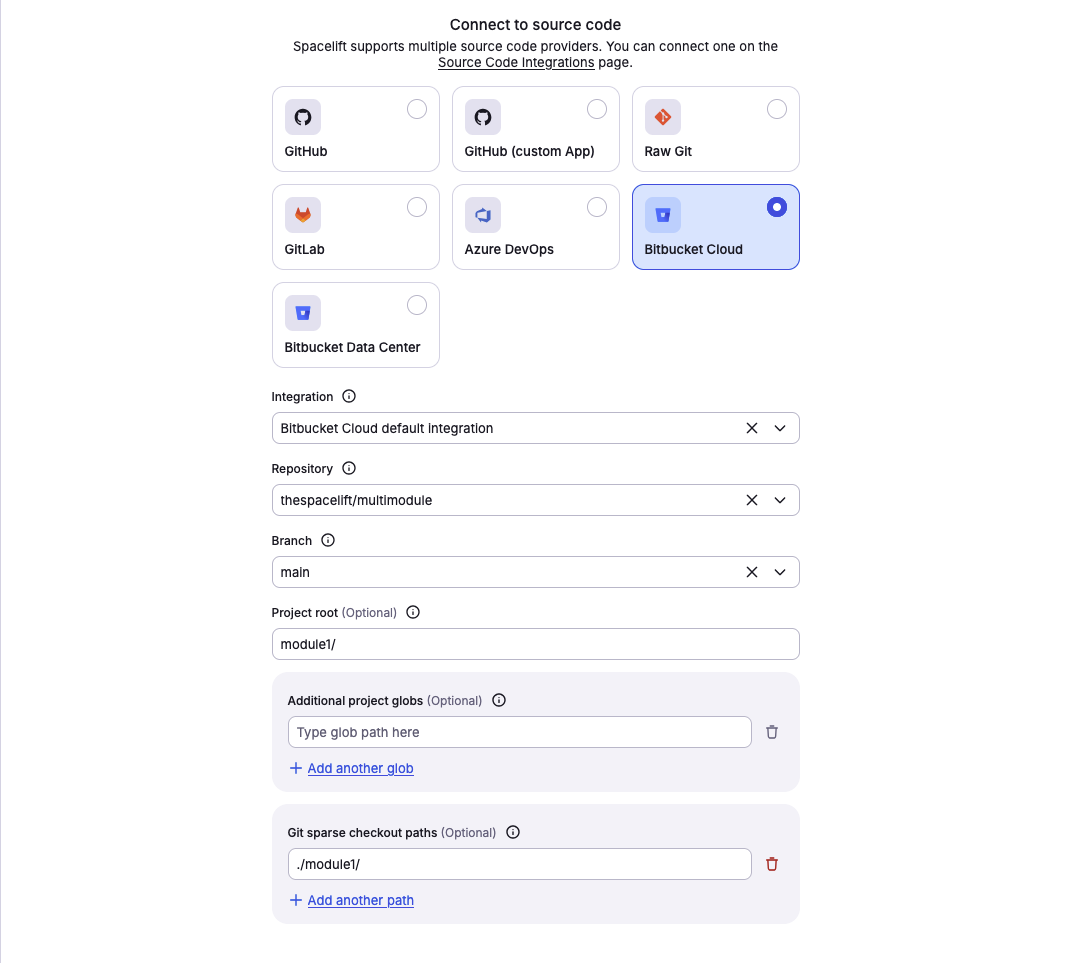

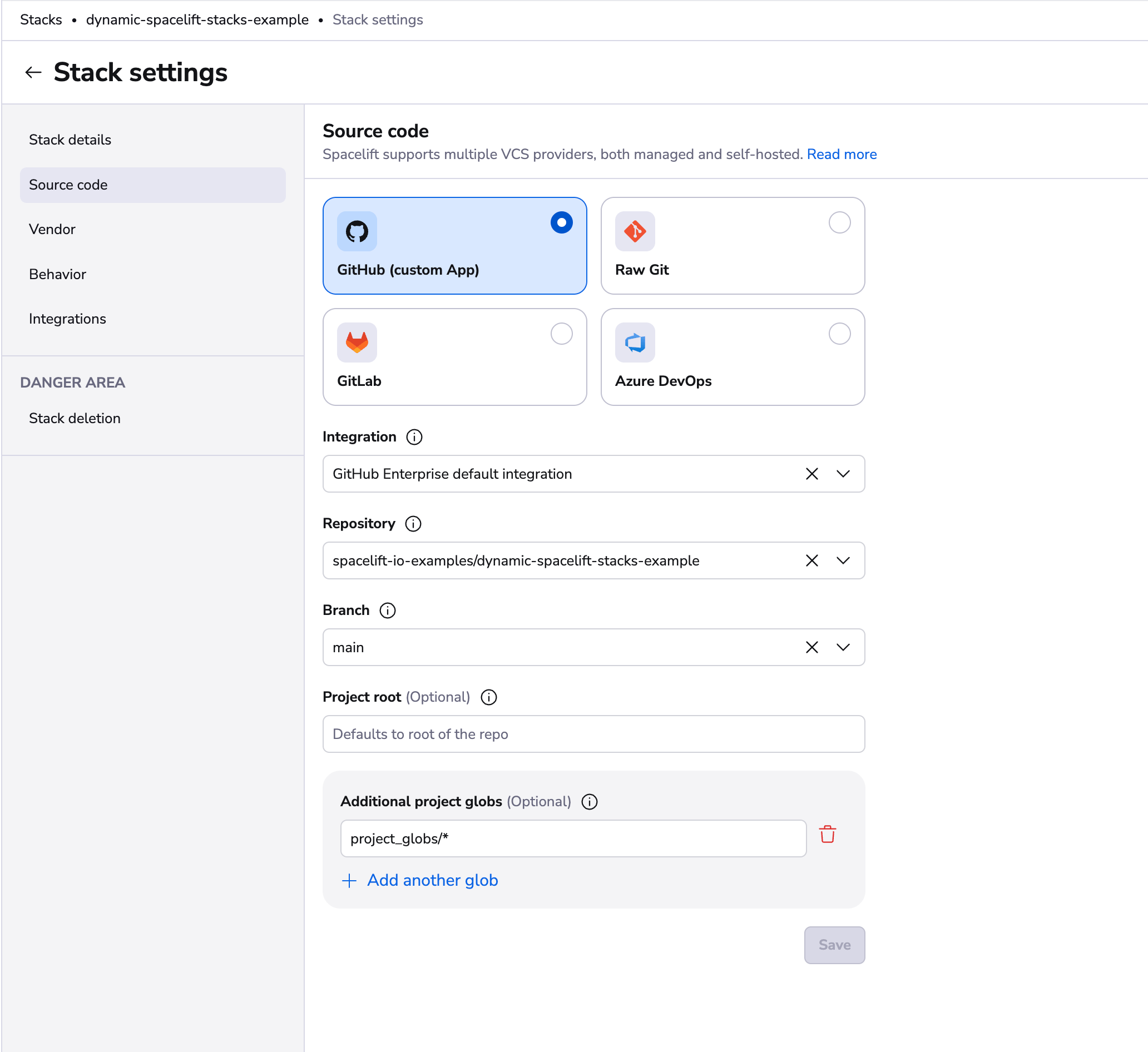

Project root

Project root points to the directory within the repo where the project should start executing. This is especially useful for monorepos or repositories hosting multiple somewhat independent projects. This setting plays very well with Git push policies, allowing you to easily express generic rules on what it means for the stack to be affected by a code change. In the absence of push policies, any changes made to the project root and any paths specified by project globs will trigger Spacelift runs.

Info

The project root can be overridden by the runtime configuration specified in the .spacelift/config.yml file.

Git sparse checkout paths

Git sparse checkout paths allow you to specify a list of directories and files that will be used in sparse checkout, meaning that only the specified directories and files from the list will be cloned. This can help reduce the size of the workspace by only downloading the parts of the repository that are needed for the stack.

Only path values are allowed; glob patterns are not supported.

Example valid paths:

- ./infrastructure/

- infrastructure/

- infrastructure

- ./infrastructure/main.tf

Example invalid path (glob pattern):

./infrastructure/*
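A hypothetical Python check that mirrors the rule above: accept plain paths and reject anything containing glob metacharacters (*, ?, or [). This is an illustration only; Spacelift's own validation may differ.

```python
GLOB_METACHARACTERS = set("*?[")


def is_valid_sparse_path(path: str) -> bool:
    """Hypothetical check: allow plain directory/file paths, reject glob patterns."""
    return bool(path) and not (GLOB_METACHARACTERS & set(path))


for candidate in ["./infrastructure/", "infrastructure/", "infrastructure",
                  "./infrastructure/main.tf", "./infrastructure/*"]:
    print(candidate, is_valid_sparse_path(candidate))
```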

Project globs

The project globs option allows you to specify files and directories outside of the project root that the stack cares about. In the absence of push policies, any changes made to the project root and any paths specified by project globs will trigger Spacelift runs.

Warning

Project globs do not mount the files or directories in your project root. They are used primarily for triggering your stack when, for example, there are changes to a module outside of the project root.

Project globs are optional, and you can add as many as you want to a stack.

Under the hood, the project globs option takes advantage of the doublestar.Match function to do pattern matching.

Example matches:

- Any directory or file: **

- The direct contents of a directory: dir/*

- A directory and all of its subdirectories and files: dir/**

- All files with a specific extension: dir/*.tf

- All files that start with one string, end with another, and have a fixed number of characters in between: data-???-report matches exactly three characters between data- and -report

- All files that start with a string and end with any character from a set: dir/instance[0-9].tf

As these examples show, the patterns use familiar shell-style glob syntax (not regular expressions), with ** matching across directory levels.
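The Python sketch below approximates the pattern rules above with a small glob-to-regex translator, then uses it to decide whether a changed file would trigger a run in the absence of push policies. It is a stand-in for doublestar.Match, not a port of it, and may differ in edge cases. glob_match, triggers_run, and the prefix-based project root check are assumptions made for illustration.

```python
import re
from typing import List


def glob_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a doublestar-style glob into a compiled regular expression.

    Approximation: ** may cross "/" separators, * and ? stay within one
    path segment, and [...] is a character class ([!...] negates it).
    """
    out = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("**", i):
            out.append(".*")
            i += 2
        elif pattern[i] == "*":
            out.append("[^/]*")
            i += 1
        elif pattern[i] == "?":
            out.append("[^/]")
            i += 1
        elif pattern[i] == "[" and "]" in pattern[i + 2:]:
            end = pattern.index("]", i + 2)
            body = pattern[i + 1:end]
            if body.startswith("!"):
                body = "^" + body[1:]
            out.append("[" + body + "]")
            i = end + 1
        else:
            out.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(out))


def glob_match(pattern: str, path: str) -> bool:
    """True if the whole path matches the glob pattern."""
    return glob_to_regex(pattern).fullmatch(path) is not None


def triggers_run(changed_path: str, project_root: str, project_globs: List[str]) -> bool:
    """Hypothetical: would a change to changed_path trigger the stack without push policies?"""
    root = project_root.strip("/")
    # Assumption: "inside the project root" means a path-prefix match.
    in_root = root in ("", ".") or changed_path == root or changed_path.startswith(root + "/")
    return in_root or any(glob_match(glob, changed_path) for glob in project_globs)


print(glob_match("dir/*", "dir/main.tf"))      # True
print(glob_match("dir/*", "dir/sub/main.tf"))  # False
print(triggers_run("modules/vpc/main.tf", "stacks/prod", ["modules/**"]))  # True
```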

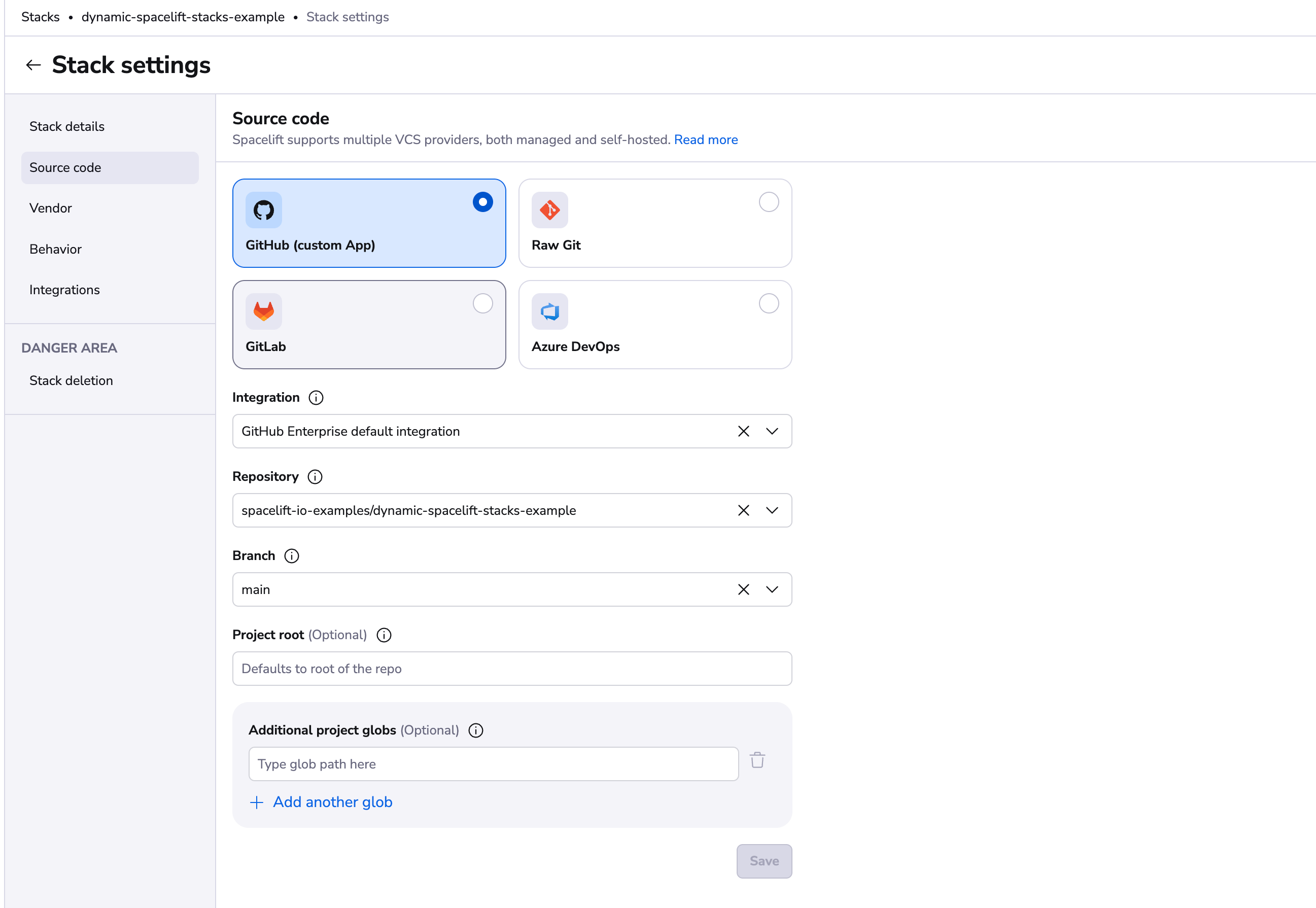

VCS integration and repository

There are two types of integrations: default and Space-level. Default integrations are always available to all stacks, while Space-level integrations are available only to stacks in the same Space as the integration or with access to it through inheritance. Read more about VCS integrations on the source control page.

Repository and branch point to the location of the source code for a stack. The repository must either belong to the GitHub account linked to Spacelift (its choice may further be limited by the way the Spacelift GitHub app has been installed) or to the GitLab server integrated with your Spacelift account. For more information about these integrations, please refer to our GitHub and GitLab documentation.

Thanks to the strong integration between GitHub and Spacelift, the link between a stack and a repository can survive the repository being renamed in GitHub. If you're storing your repositories in GitLab, then you need to make sure to manually (or programmatically using Terraform) point the stack to the new location of the source code.

Info

Spacelift does not support moving repositories between GitHub accounts, since Spacelift accounts are strongly linked to GitHub ones. In that case, the best course of action is to download your Terraform state and import it while recreating the stack (or multiple stacks) in a different account. After that, all the stacks pointing to the old repository can be safely deleted.

Moving a repository between GitHub and GitLab or the other way around is simple, however. Just change the provider setting on the Spacelift project, and point the stack to the new source code location.

Branch signifies the repository branch tracked by the stack. By default, unless a Git push policy explicitly determines otherwise, a commit pushed to the tracked branch triggers a deployment represented by a tracked run. A push to any other branch by default triggers a test represented by a proposed run. Learn more about git push policies, tracked branches, and head commits.

Results of both tracked and proposed runs are displayed in the source control provider using their specific APIs. Refer to our GitHub and GitLab documentation to understand how Spacelift feedback is provided for your infrastructure changes.

Info

A branch must exist before it's pointed to in Spacelift.

Runner image

Since every Spacelift job (which we call a run) is executed in a separate Docker container, setting a custom runner image provides a convenient way to prepare the exact runtime environment your infra-as-code flow is designed to use.

Additionally, for our Pulumi integration, overriding the default runner image is the canonical way of selecting the exact Pulumi version and its corresponding language SDK.

Info

Runner image can be overridden by the runtime configuration specified in the .spacelift/config.yml file.

On the public worker pool, Docker images can only be pulled from allowed registries. On private workers, images can be stored in any registry, including self-hosted ones.

Worker pool

Use this setting to choose which worker pool the stack uses. The default is the public worker pool.

OpenTofu/Terraform-specific settings

Version

The OpenTofu/Terraform version is set when a stack is created to indicate the version of OpenTofu/Terraform that will be used with this project. From then on, Spacelift manages the version for you: applying a change with a newer version automatically updates the version on the stack.

Workspace

Spacelift also supports OpenTofu and Terraform workspaces, as long as your state backend supports them. If the workspace is set, Spacelift first tries to select it and, if that fails, automatically creates the required OpenTofu/Terraform workspace on the state backend.

If you're managing your OpenTofu/Terraform state through Spacelift, the workspace argument is ignored since Spacelift gives each stack a separate workspace by default.

Pulumi-specific settings

Login URL

Login URL is the address Pulumi should log into during Run initialization. Since we do not yet provide a full-featured Pulumi state backend, you need to bring your own (e.g. Amazon S3).

You can read more about the login process and a general explanation of Pulumi state management and backends.

Stack name

The name of the Pulumi stack, which should be selected for backend operations. Please do not confuse it with the Spacelift stack name. They may be different, but it's useful to keep them identical.