Deploying to EKS

This guide provides a way to quickly get Spacelift up and running on an Elastic Kubernetes Service (EKS) cluster. It uses a relatively simple networking setup in which Spacelift is accessible through a public load balancer, but you can adapt it to your needs as long as Spacelift's basic networking requirements are still met.

To deploy Spacelift on EKS you need to take the following steps:

- Deploy your basic infrastructure components.

- Push the Spacelift images to your Elastic Container Registry.

- Deploy the Spacelift backend services using our Helm chart.

Overview

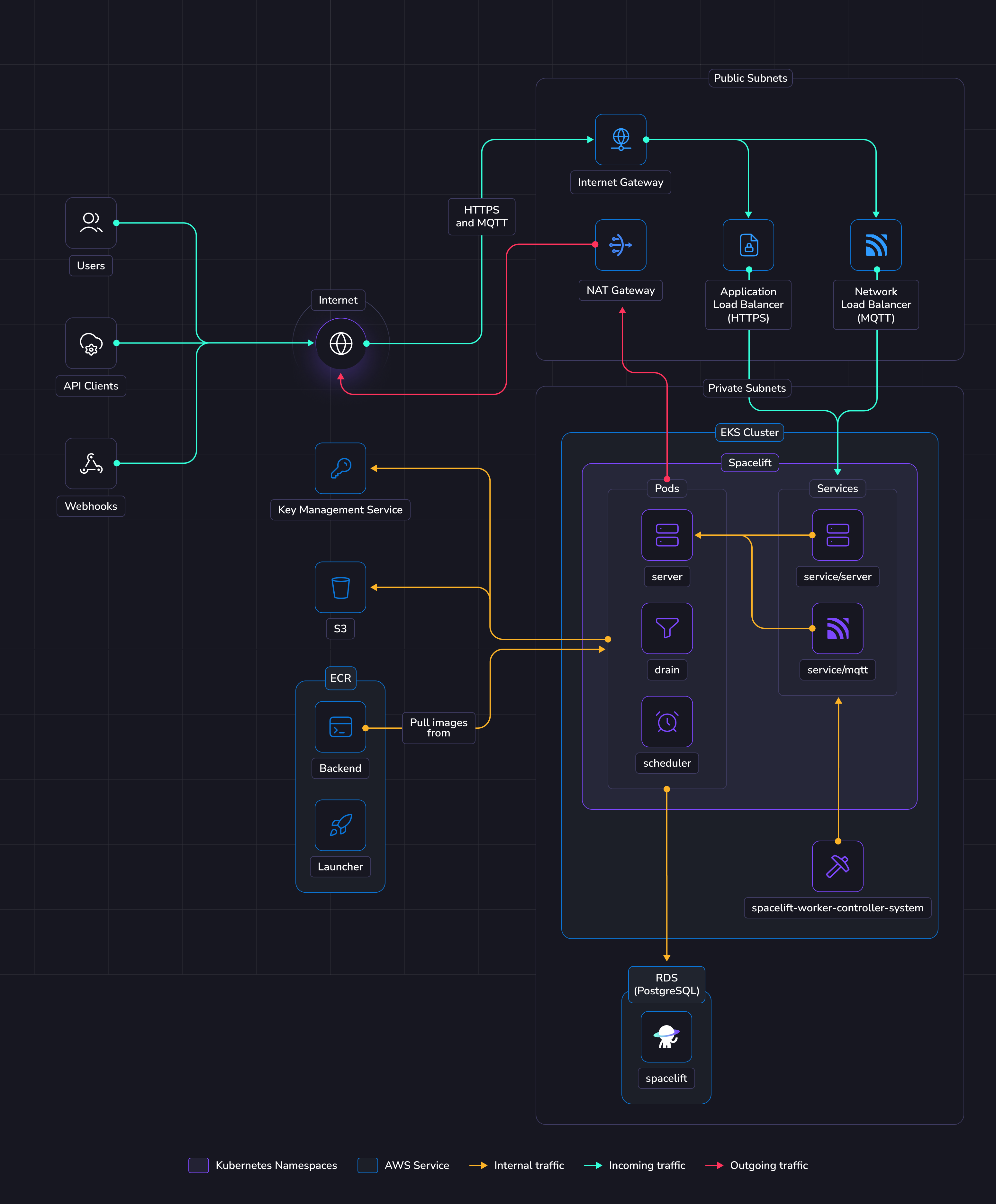

The illustration below shows what the infrastructure looks like when running Spacelift in EKS.

Networking

Info

More details regarding networking requirements for Spacelift can be found on this page.

This section focuses solely on how the EKS infrastructure is configured to meet Spacelift's requirements.

In this guide we'll create a new VPC with public and private subnets. The public subnets will contain the following items to allow communication between Spacelift and the external internet:

- An Application Load Balancer to allow inbound HTTPS traffic to reach the Spacelift server instances. This load balancer will be created automatically via a Kubernetes Ingress.

- An Internet Gateway to allow inbound access to the load balancer.

- A NAT Gateway to allow egress traffic from the Spacelift services.

The private subnets contain the Spacelift RDS Postgres database and the Spacelift Kubernetes pods, and are not directly accessible from the internet.

Object Storage

The Spacelift instance needs an object storage backend to store Terraform state files, run logs, and other data. Object storage is a hard requirement for running Spacelift, so this guide creates several S3 buckets to provide it.

Spacelift uses the AWS SDK default credential provider chain for S3 authentication, supporting environment variables, shared credential files and IAM roles for EKS. More details about object storage requirements and authentication can be found here.

Database

Spacelift requires a PostgreSQL database to operate. In this guide we'll create a new Aurora Serverless RDS instance. You can also reuse an existing instance and create a new database in it. In that case you'll have to adjust the database URL and other settings across the guide. It's also up to you to configure appropriate networking to expose this database to Spacelift's VPC.

To skip creating an RDS instance, set the create_database option of the Terraform module to false.
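If you reuse an existing database, you'll need to supply its connection URL yourself. As a minimal sketch, assuming the common postgres://user:password@host:port/database URL form, the helper below percent-encodes the credentials so that characters such as @, : or / in a password don't break the URL. The sslmode=require parameter, host and database name are hypothetical placeholders; check the reference section for the exact format Spacelift expects.

```python
from urllib.parse import quote


def build_database_url(user: str, password: str, host: str, database: str,
                       port: int = 5432, sslmode: str = "require") -> str:
    """Build a PostgreSQL URL with percent-encoded credentials (a sketch)."""
    # safe="" makes quote() encode reserved characters such as "@", ":" and "/".
    return (
        f"postgres://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(database, safe='')}?sslmode={sslmode}"
    )


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    print(build_database_url("spacelift", "p@ss/word:1", "db.example.internal", "spacelift"))
```

Encoding the password rather than restricting it keeps generated RDS passwords usable as-is.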

More details about database requirements for Spacelift can be found here.

EKS

In this guide, we'll create a new EKS cluster to run Spacelift. The EKS cluster will use a very simple configuration including an internet-accessible public endpoint.

The following services will be deployed as Kubernetes pods:

- The scheduler.

- The drain.

- The server.

The scheduler is the component that handles recurring tasks. It creates new entries in a message queue when a new task needs to be performed.

The drain is an async background processing component that picks up items from the queue and processes events.

The server hosts the Spacelift GraphQL API, REST API and serves the embedded frontend assets. It also contains the MQTT server to handle interactions with workers. The server is exposed to the outside world using an Application Load Balancer for HTTP traffic, and a Network Load Balancer for MQTT traffic.

Workers

In this guide Spacelift workers will also be deployed in EKS. That means that your Spacelift runs will be executed in the same environment as the app itself (we recommend using another K8s namespace).

If you want to run workers outside the EKS cluster created for Spacelift, you can set the mqtt_broker_domain option of the Terraform module below. In order for this to work, you will also need to perform some additional tasks like setting up a DNS record for the MQTT broker endpoint, and making the launcher image available to your external workers if you wish to run them in Kubernetes. If you're unsure what this entails, we recommend that you stick with the default option.

We highly recommend running your Spacelift workers within the same cluster, in a dedicated namespace. This simplifies the infrastructure deployment and makes it more secure since your runs are executed in the same environment.

Requirements

Before proceeding with the next steps, the following tools must be installed on your computer.

- AWS CLI v2.

- Docker.

- Helm.

- OpenTofu or Terraform.

Info

In the following sections of the guide, OpenTofu will be used to deploy the infrastructure needed for Spacelift. If you are using Terraform, simply swap tofu for terraform.

Server certificate

Spacelift should run under an HTTPS endpoint, so you need to provide a valid certificate to the Ingress resource deployed by Spacelift. In this guide we will assume that you already have an ACM certificate for the domain that you wish to host Spacelift on.

Warning

Your certificate must be in the Issued status before you can access Spacelift.

Deploy infrastructure

We provide a Terraform module to deploy Spacelift's infrastructure requirements.

Parts of the module can be disabled if you prefer to manage those resources yourself. For example, you may want to disable the database if you already have a Postgres instance and want to reuse it.

Note

If you want to reuse an existing cluster, you can read this section of the EKS module. You'll need to deploy all the infrastructure dependencies (object storage and database) and make sure your cluster is able to reach them.

Before you start, set a few environment variables that will be used by the Spacelift modules:


Note

The admin login/password combination is only used for the very first login to the Spacelift instance. It can be removed after the initial setup. More information can be found in the initial setup section.

Below is an example of how to use this module:


EKS Upgrade Policy

The eks_upgrade_policy determines how your EKS cluster handles Kubernetes version upgrades when standard support ends.

- STANDARD: Automatically upgrades to the next Kubernetes version when the 14-month standard support period ends. This option provides more frequent security patches and bug fixes at a lower cost.

- EXTENDED (AWS default): Prevents automatic upgrades and keeps the cluster on the current version for an additional 12 months after standard support ends. This incurs higher costs and receives fewer updates.

By explicitly setting support_type = "STANDARD" in the example above, you're opting out of AWS's default extended support behavior.

Important: You can only change the upgrade policy while your cluster is running a Kubernetes version in standard support. Once a cluster version enters extended support, you cannot change the policy until you upgrade to a standard-supported version. For more details, see AWS EKS extended support documentation.

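To make the two support windows concrete, the sketch below adds the 14-month standard support period, then the 12 additional months of extended support, to a Kubernetes version's EKS release date. The release date is a hypothetical placeholder, and simple month arithmetic only approximates the end dates AWS publishes in its EKS Kubernetes version calendar.

```python
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Return the date `months` calendar months after `start`, clamping the day."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    # Clamp e.g. 31 January + 1 month to the last day of February.
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def support_windows(release: date) -> dict[str, date]:
    """Approximate end of standard (14 months) and extended (+12 months) support."""
    standard_end = add_months(release, 14)
    extended_end = add_months(standard_end, 12)
    return {"standard_end": standard_end, "extended_end": extended_end}


if __name__ == "__main__":
    # Hypothetical release date, for illustration only.
    print(support_windows(date(2024, 1, 15)))
```

With the STANDARD policy, the cluster is upgraded around the first date; with EXTENDED, it can stay on the version until roughly the second, at extra cost.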

Feel free to take a look at the documentation for the terraform-aws-eks-spacelift-selfhosted module before applying your infrastructure in case there are any settings that you wish to adjust. Once you are ready, apply your changes:


Once the changes are applied, export the variables needed in the next steps into your shell. For convenience, the module exposes a shell output that you can source directly.


Info

Throughout this guide you'll export shell variables that later steps rely on, so keep the same shell open for the entire guide.

Push images to Elastic Container Registry

Assuming you have sourced the shell output as described in the previous section, you can run the following commands to upload the container images to your container registries and the launcher binary to the binaries S3 bucket:

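In standard commercial AWS regions, private ECR image references take the form ACCOUNT_ID.dkr.ecr.REGION.amazonaws.com/REPOSITORY:TAG. The sketch below composes such references, which can help when checking what you tagged and pushed; the account ID, region, repository name and tag are hypothetical, so substitute the values from the shell output you sourced earlier.

```python
def ecr_registry(account_id: str, region: str) -> str:
    """Return the private ECR registry host for an account and region."""
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError("AWS account IDs are 12 digits")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def ecr_image(account_id: str, region: str, repository: str, tag: str) -> str:
    """Return a fully qualified image reference for docker tag / docker push."""
    return f"{ecr_registry(account_id, region)}/{repository}:{tag}"


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    print(ecr_image("123456789012", "eu-west-1", "spacelift", "v1.0.0"))
```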

Deploy Spacelift

First, we need to configure Kubernetes credentials to interact with the EKS cluster.


Warning

Make sure the above KUBECONFIG environment variable is present when running the helm commands later in this guide.
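Because later commands depend on variables exported earlier in the same shell, a quick preflight check can save a confusing failure. This sketch checks only KUBECONFIG and K8S_NAMESPACE, the variables this guide refers to by name; extend REQUIRED_VARS (a hypothetical name) with any other variables you exported.

```python
import os
from collections.abc import Mapping

# Variables this guide refers to by name; extend with others you exported earlier.
REQUIRED_VARS = ("KUBECONFIG", "K8S_NAMESPACE")


def missing_vars(env: Mapping[str, str],
                 required: tuple[str, ...] = REQUIRED_VARS) -> list[str]:
    """Return the required variables that are unset or empty."""
    return [name for name in required if not env.get(name)]


def kubeconfig_exists(env: Mapping[str, str]) -> bool:
    """Return True if at least one path listed in KUBECONFIG is an existing file."""
    paths = [p for p in env.get("KUBECONFIG", "").split(os.pathsep) if p]
    return any(os.path.isfile(p) for p in paths)


if __name__ == "__main__":
    missing = missing_vars(os.environ)
    if missing:
        print("Missing environment variables:", ", ".join(missing))
    elif not kubeconfig_exists(os.environ):
        print("KUBECONFIG does not point to an existing file")
    else:
        print("Environment looks ready for the helm commands.")
```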

Create Kubernetes namespace


Info

In the command above, we're labelling the namespace with eks.amazonaws.com/pod-readiness-gate-inject to enable pod readiness gates for the AWS load balancer controller. This label key differs from the elbv2.k8s.aws/pod-readiness-gate-inject key mentioned in the load balancer controller docs because this guide uses EKS Auto Mode.

Pod readiness gates are optional, but can help to prevent situations where requests are routed to pods that aren't ready to handle them when using the AWS load balancer controller.
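The namespace and its label can also be expressed declaratively. The sketch below builds a Namespace manifest as JSON, which kubectl apply -f - accepts on standard input. The label value enabled is an assumption, based on the value the AWS load balancer controller docs use for their own readiness-gate label, and the fallback namespace name spacelift is hypothetical.

```python
import json
import os

READINESS_GATE_LABEL = "eks.amazonaws.com/pod-readiness-gate-inject"


def namespace_manifest(name: str, readiness_gates: bool = True) -> dict:
    """Return a Namespace manifest, optionally labelled for pod readiness gates."""
    # The "enabled" value is an assumption; verify it against the AWS docs.
    labels = {READINESS_GATE_LABEL: "enabled"} if readiness_gates else {}
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": name, "labels": labels},
    }


if __name__ == "__main__":
    # Pipe the output into: kubectl apply -f -
    ns = os.environ.get("K8S_NAMESPACE", "spacelift")  # hypothetical fallback
    print(json.dumps(namespace_manifest(ns), indent=2))
```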

Create IngressClass

To allow access to the Spacelift HTTP server via an AWS Application Load Balancer, we need to create IngressClassParams and IngressClass resources that specify the subnets and ACM certificate to use. For convenience, the Terraform module provides a kubernetes_ingress_class output that you can pass to kubectl apply to create them:

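To illustrate how the two resources fit together, the sketch below builds them as Python dicts: the IngressClass points at the EKS Auto Mode ALB controller and references the IngressClassParams object that carries the subnets and certificate. The field names follow our reading of the EKS Auto Mode schema (apiVersion eks.amazonaws.com/v1, controller eks.amazonaws.com/alb) and are assumptions; the module's kubernetes_ingress_class output remains the source of truth. The class name, subnet IDs and certificate ARN are hypothetical.

```python
import json


def ingress_class_manifests(name: str, subnet_ids: list[str],
                            certificate_arn: str) -> list[dict]:
    """Return IngressClassParams and IngressClass manifests (EKS Auto Mode, assumed schema)."""
    params = {
        "apiVersion": "eks.amazonaws.com/v1",
        "kind": "IngressClassParams",
        "metadata": {"name": name},
        "spec": {
            "scheme": "internet-facing",
            "subnets": {"ids": subnet_ids},
            "certificateARNs": [certificate_arn],
        },
    }
    ingress_class = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "IngressClass",
        "metadata": {"name": name},
        "spec": {
            "controller": "eks.amazonaws.com/alb",
            # Links the IngressClass to the parameters object above.
            "parameters": {
                "apiGroup": "eks.amazonaws.com",
                "kind": "IngressClassParams",
                "name": name,
            },
        },
    }
    return [params, ingress_class]


if __name__ == "__main__":
    # Hypothetical subnet IDs and certificate ARN for illustration only.
    manifests = ingress_class_manifests(
        "spacelift-alb",
        ["subnet-0123456789abcdef0", "subnet-0fedcba9876543210"],
        "arn:aws:acm:eu-west-1:123456789012:certificate/example",
    )
    # kubectl apply -f - accepts a v1 List wrapping several resources.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```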

Create secrets

The Spacelift services need various environment variables to be configured to operate correctly. In this guide we will create three Spacelift secrets to pass these variables to the Spacelift backend services:

- spacelift-shared: contains variables used by all services.

- spacelift-server: contains variables specific to the Spacelift server.

- spacelift-drain: contains variables specific to the Spacelift drain.

For convenience, the terraform-aws-eks-spacelift-selfhosted Terraform module provides a kubernetes_secrets output that you can pass to kubectl apply to create the secrets:


To find out more about all of the configuration options that are available, please see the reference section of this documentation.
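Each of these is an ordinary Kubernetes Secret. The sketch below shows the shape of such a manifest using stringData, which accepts plain-text values that the API server stores in the Secret's data field. The variable names and values are hypothetical placeholders; the real variables come from the module's kubernetes_secrets output and the reference section.

```python
import json


def secret_manifest(name: str, namespace: str, values: dict[str, str]) -> dict:
    """Return an Opaque Secret manifest built from plain-text stringData values."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": name, "namespace": namespace},
        # The API server merges stringData into data when the Secret is written.
        "stringData": dict(values),
    }


if __name__ == "__main__":
    namespace = "spacelift"  # hypothetical; use your K8S_NAMESPACE
    # Hypothetical variable names, for illustration only.
    secrets = [
        secret_manifest("spacelift-shared", namespace, {"EXAMPLE_SHARED_VAR": "value"}),
        secret_manifest("spacelift-server", namespace, {"EXAMPLE_SERVER_VAR": "value"}),
        secret_manifest("spacelift-drain", namespace, {"EXAMPLE_DRAIN_VAR": "value"}),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": secrets}, indent=2))
```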

Deploy application

You need to provide a number of configuration options to Helm when deploying Spacelift to configure it correctly for your environment. You can generate a Helm values.yaml file to use via the helm_values output variable of the terraform-aws-eks-spacelift-selfhosted Terraform module:


Feel free to take a look at this file to understand what is being configured. Once you're happy, run the following command to deploy Spacelift:


Tip

You can follow the deployment progress with: kubectl logs -n ${K8S_NAMESPACE} deployments/spacelift-server

Once the chart has deployed successfully, you can get the information you need to set up DNS entries for Spacelift. To get the domain name of the Spacelift application load balancer, use the ADDRESS field from the kubectl get ingresses command, as in the following example:


If you are using external workers, you can get the domain name of your MQTT broker from the EXTERNAL-IP field from the kubectl get services command, like in the following example:

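If you'd rather script the lookup than read the ADDRESS or EXTERNAL-IP column, both Ingress and Service objects report their load balancer address under status.loadBalancer.ingress in the JSON returned by kubectl get ... -o json. The sketch below extracts those addresses from either a single object or the List kubectl returns; the sample data is a trimmed, hypothetical example.

```python
import json


def load_balancer_hostnames(resource: dict) -> list[str]:
    """Return load balancer addresses reported in an Ingress or Service status."""
    status = resource.get("status") or {}
    entries = (status.get("loadBalancer") or {}).get("ingress") or []
    names = []
    for entry in entries:
        # AWS load balancers report a DNS hostname; some providers report an IP instead.
        address = entry.get("hostname") or entry.get("ip")
        if address:
            names.append(address)
    return names


def all_hostnames(obj: dict) -> list[str]:
    """Accept a single object or the List returned by kubectl get ... -o json."""
    items = obj.get("items")
    if items is None:
        return load_balancer_hostnames(obj)
    return [name for item in items for name in load_balancer_hostnames(item)]


if __name__ == "__main__":
    # Trimmed, hypothetical output of: kubectl get ingresses -n "$K8S_NAMESPACE" -o json
    sample = json.loads("""
    {"kind": "List", "items": [
      {"kind": "Ingress",
       "status": {"loadBalancer": {"ingress": [
         {"hostname": "k8s-example-0123456789.eu-west-1.elb.amazonaws.com"}]}}}]}
    """)
    print(all_hostnames(sample))
```

For a one-off lookup, kubectl's jsonpath output, for example -o jsonpath='{.status.loadBalancer.ingress[0].hostname}', returns the same value without any code.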

Configure your DNS zone

You should now go ahead and create appropriate CNAME entries to allow access to your Spacelift and (if using external workers) MQTT addresses.

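The records themselves are plain CNAMEs pointing your chosen hostnames at the load balancer addresses. As a sketch, the function below renders them in BIND zone-file syntax, which many DNS providers can import; the domain names, load balancer hostnames and 300-second TTL are hypothetical.

```python
def _fqdn(host: str) -> str:
    """Append the trailing dot that marks an absolute name in zone files."""
    return host if host.endswith(".") else host + "."


def cname_record(name: str, target: str, ttl: int = 300) -> str:
    """Render one CNAME record in BIND zone-file syntax."""
    return f"{_fqdn(name)}\t{ttl}\tIN\tCNAME\t{_fqdn(target)}"


if __name__ == "__main__":
    # Hypothetical domains and load balancer hostnames for illustration only.
    records = [
        cname_record("spacelift.example.com",
                     "k8s-example-0123456789.eu-west-1.elb.amazonaws.com"),
        # Only needed when running workers outside the cluster.
        cname_record("mqtt.spacelift.example.com",
                     "example-nlb-0123456789.elb.eu-west-1.amazonaws.com"),
    ]
    print("\n".join(records))
```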

VCS Gateway Service

Ideally, your VCS provider should be accessible from both the Spacelift backend and its workers. If direct access is not possible, you can use VCS Agent Pools to proxy the connections from the Spacelift backend to your VCS provider.

The VCS Agent Pool architecture introduces a VCS Gateway service, deployed alongside the Spacelift backend, and exposed via a dedicated Application Load Balancer. External VCS Agents connect to this load balancer over gRPC (port 443 with TLS termination), while internal Spacelift services (server, drain) communicate with the gateway via HTTP using pod IP addresses within the cluster.

To enable this setup, add the following variables to your module configuration:


After applying the Terraform changes, re-apply the ingress class (which now includes the VCS Gateway listener certificate), regenerate your spacelift-values.yaml from the helm_values output, and redeploy the Helm chart to enable the VCS Gateway service:


Set up the DNS record for the VCS Gateway service. You can find the load balancer address from the ingress:


Create a CNAME record pointing your VCS Gateway domain to this load balancer address:


With the backend now configured, proceed to the VCS Agent Pools guide to complete the setup.

Next steps

Now that your Spacelift installation is up and running, take a look at the initial installation section for the next steps to take.

Configure telemetry

You can configure telemetry collection to monitor your installation's performance and troubleshoot issues. See our telemetry configuration guides for step-by-step instructions on setting up Datadog, OpenTelemetry with Jaeger, or OpenTelemetry with Grafana Stack.

Create a worker pool

We recommend that you deploy workers in a dedicated namespace.


Warning

When creating your WorkerPool, make sure to configure resources. This is strongly recommended because otherwise your admission controller may automatically set very high resource requests.

Also make sure to deploy the WorkerPool and its secrets into the correct namespace we just created by adding -n ${K8S_WORKER_POOL_NAMESPACE} to the commands in the guide below.

➡️ You need to follow this guide for configuring Kubernetes Workers.

Deletion / uninstall

Before running tofu destroy on the infrastructure, we recommend cleaning up the resources in the Kubernetes cluster first. The Spacelift Helm chart creates some AWS resources (such as load balancers) that are not managed by Terraform. If you don't remove them through Kubernetes, tofu destroy will fail, because networking resources such as subnets and the VPC cannot be deleted while other resources still use them.


Note

Namespace deletions in Kubernetes can take a while or even get stuck. If that happens, you need to remove the finalizers from the stuck resources.
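One common way to clear a resource's finalizers is a JSON merge patch that sets metadata.finalizers to null, applied with kubectl patch --type=merge. The sketch below builds that patch and the matching kubectl command line without running anything; the resource kind, name and namespace in the example are hypothetical. Removing a finalizer skips the cleanup it guards, so check that any AWS load balancer it protected has actually been deleted.

```python
import json
import shlex


def remove_finalizers_patch() -> str:
    """JSON merge patch (RFC 7386) that deletes metadata.finalizers."""
    # In a merge patch, null removes the field from the target object.
    return json.dumps({"metadata": {"finalizers": None}})


def kubectl_patch_args(kind: str, name: str, namespace: str) -> list[str]:
    """Return the kubectl argv that applies the patch to one resource."""
    return ["kubectl", "patch", kind, name, "-n", namespace,
            "--type=merge", "-p", remove_finalizers_patch()]


if __name__ == "__main__":
    # Hypothetical stuck resource, for illustration only.
    print(shlex.join(kubectl_patch_args("ingress", "example-ingress", "spacelift")))
```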

Before running tofu destroy on the infrastructure, you may want to set the following properties for the terraform-aws-spacelift-selfhosted module to allow the RDS, ECR and S3 resources to be cleaned up properly:


Remember to apply those changes before running tofu destroy.