Disaster Recovery

Spacelift Self-Hosted installations support multi-region disaster recovery (DR). This allows you to cope with an outage of an entire AWS region by failing over to a secondary region.

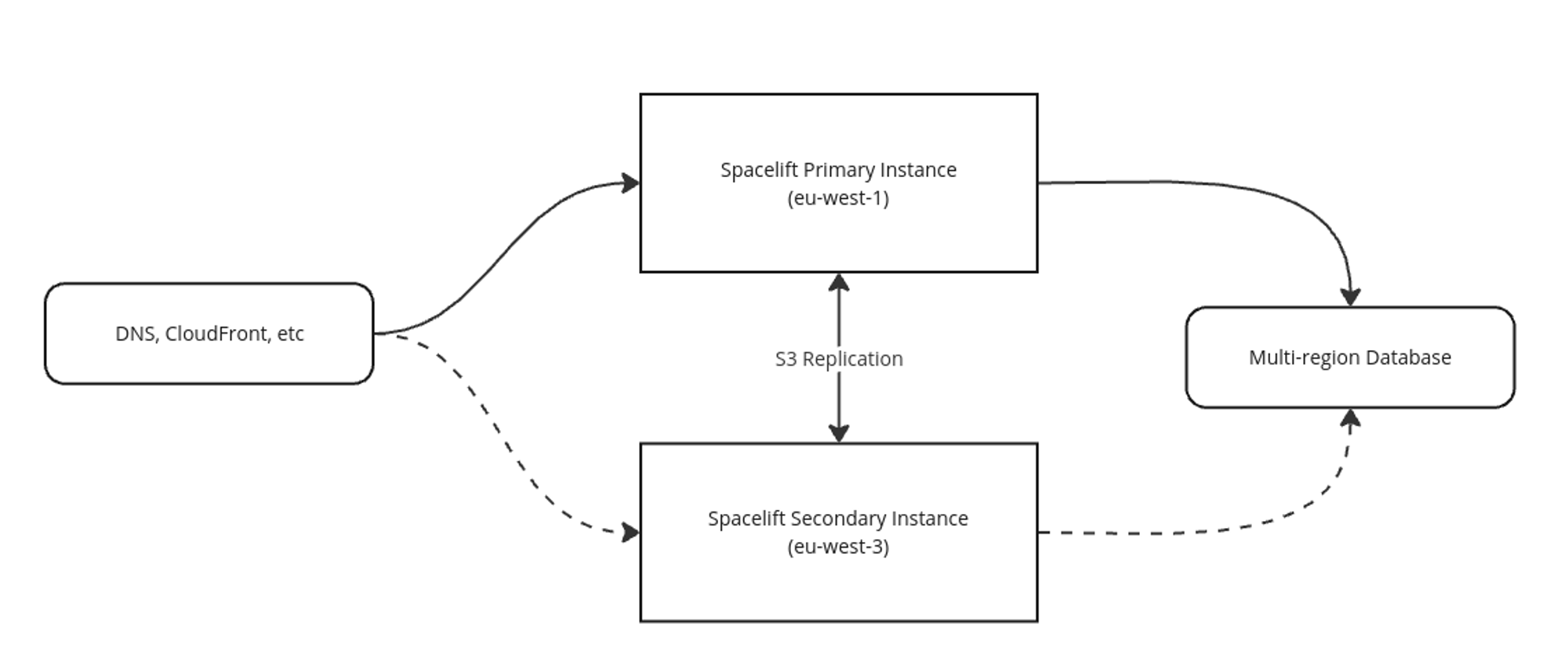

At a high level, the architecture of a multi-region DR installation of Spacelift looks like the following:

The main points to be aware of are:

- Two instances of Self-Hosted are deployed to two different AWS regions.

- S3 replication between the primary and secondary regions is used to automatically replicate things like state files, run logs and module definitions. The replication is bidirectional.

- Both regions connect to the same database, although only one region needs write access at any point in time.

- In the case of a failover, you can either update DNS records to switch from pointing at the primary to the secondary region, or you can use something like CloudFront to switch over without relying on DNS.

The rest of this page explains how to install a DR instance of Self-Hosted, how to convert an existing installation to be capable of failover, and an example runbook explaining the failover and failback processes.

Installation

Prerequisites

To install a DR-capable version of Self-Hosted, you must meet the following requirements:

- A Postgres 13.7 or higher database capable of being used in multiple regions, and the Self-Hosted instance configured to use a custom DB connection string.

- NOTE: the database does not need to be active-active. It just needs to be capable of being used by the failover instance in the case of a regional failover (for example, Aurora Global).

- Both instances of Self-Hosted need to be able to access your Postgres database. Because of this, you will probably want to follow the Advanced Installation guide when setting up your Self-Hosted instances, so that you have full control over the VPCs they use.

- A custom IoT broker domain configuration, along with the relevant DNS records, configured so that you can fail over to your secondary region.
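To confirm the version requirement before you start, a small check such as the following can compare the value returned by PostgreSQL's `SHOW server_version` with the 13.7 minimum. This is a hypothetical helper, not part of the Self-Hosted installer, and it assumes the two-part `major.minor` numbering PostgreSQL has used since version 10.

```python
import re


def meets_minimum_postgres(server_version: str, minimum: tuple = (13, 7)) -> bool:
    """Return True if a `SHOW server_version` string is at least `minimum`.

    Only the leading "major.minor" part is compared, so values such as
    "13.7" and "15.4 (Debian 15.4-1.pgdg120+1)" are both accepted.
    """
    match = re.match(r"\s*(\d+)\.(\d+)", server_version)
    if match is None:
        raise ValueError(f"unrecognised server_version: {server_version!r}")
    major, minor = int(match.group(1)), int(match.group(2))
    return (major, minor) >= minimum


print(meets_minimum_postgres("13.7"))  # True
print(meets_minimum_postgres("13.6"))  # False
print(meets_minimum_postgres("15.4 (Debian 15.4-1.pgdg120+1)"))  # True
```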

Steps

To set up a DR instance of Spacelift that you can fail over to if your primary AWS region has an outage, use the following steps:

- Deploy your primary region.

- Deploy your secondary (DR) region.

- Configure S3 replication in your primary region.

The following sections explain each step in detail, describing how to set up a Self-Hosted installation with a primary region in eu-west-1 and a secondary (DR) region in eu-west-3.

Warning

Please note that these instructions deliberately leave out details such as how to set up your DNS records for Self-Hosted, because this does not differ from a standard Self-Hosted installation.

Deploying primary region

Deploy a copy of Self-Hosted as you normally would into your primary region, making sure to configure the following settings:

- `database.connection_string_ssm_arn` and `database.connection_string_ssm_kms_arn`, to use a self-managed database capable of being used from multiple regions.

- `iot_broker_endpoint`, pointing at your custom IoT broker domain (for example `worker-iot.spacelift.myorg.com`).
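Before running the installer, you could sanity-check that your primary config file contains these settings. The sketch below assumes that each dotted name maps onto nested JSON objects in the config file (for example, `database.connection_string_ssm_arn` becomes `config["database"]["connection_string_ssm_arn"]`); the helper names and placeholder values are hypothetical.

```python
import json

# Dotted setting names from this guide; assumed to map onto nested JSON objects.
REQUIRED_PRIMARY_SETTINGS = [
    "database.connection_string_ssm_arn",
    "database.connection_string_ssm_kms_arn",
    "iot_broker_endpoint",
]


def get_setting(config: dict, dotted: str):
    """Walk a dotted path through nested dicts, returning None if any part is missing."""
    node = config
    for part in dotted.split("."):
        if not isinstance(node, dict) or part not in node:
            return None
        node = node[part]
    return node


def missing_primary_settings(config: dict) -> list:
    """List the DR-related settings that are absent or empty in a primary config."""
    return [name for name in REQUIRED_PRIMARY_SETTINGS if not get_setting(config, name)]


config = json.loads("""
{
  "database": {"connection_string_ssm_arn": "placeholder-arn", "connection_string_ssm_kms_arn": ""},
  "iot_broker_endpoint": "worker-iot.spacelift.myorg.com"
}
""")
print(missing_primary_settings(config))  # ['database.connection_string_ssm_kms_arn']
```

In practice you would load your real file with `json.load` instead of the inline example.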

Once the installation has completed, the installer outputs some information about your instance.

The key pieces of information you need for the next step are the Encryption primary key ARN and the information under the S3 bucket replication info section. Make a note of these values.

Deploying secondary region

Deploying your secondary region is very similar to deploying your primary region. The main differences are that you will provide some DR-specific config information, and you will also provide different values for the following properties:

- `aws_region`: set to the name of your secondary region.

- `database.db_cluster_identifier`: optional, and only required if you want the Spacelift CloudWatch dashboard to be deployed.

- `database.connection_string_ssm_arn`: points at a secret in your secondary region containing the database connection string.

- `database.connection_string_ssm_kms_arn`: a key available in your secondary region, used to encrypt the database connection string secret.

- `load_balancer.certificate_arn`: an AWS Certificate Manager TLS certificate available in the secondary region.

- `disable_services`: set to `true` in the DR region.
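One way to avoid hand-editing mistakes is to generate the DR config from the primary one and override only the region-specific properties listed above. This is a minimal sketch under the same assumption that dotted names map to nested JSON objects; `derive_secondary_config` and all the values shown are placeholders, not real ARNs.

```python
import copy


def set_setting(config: dict, dotted: str, value) -> None:
    """Set a dotted path in nested dicts, creating intermediate objects as needed."""
    *parents, leaf = dotted.split(".")
    node = config
    for part in parents:
        node = node.setdefault(part, {})
    node[leaf] = value


def derive_secondary_config(primary: dict, overrides: dict) -> dict:
    """Copy the primary config and apply the region-specific overrides for the DR region."""
    secondary = copy.deepcopy(primary)  # never mutate the primary config
    for dotted, value in overrides.items():
        set_setting(secondary, dotted, value)
    # Services stay stopped in the DR region until you fail over.
    set_setting(secondary, "disable_services", True)
    return secondary


primary = {"aws_region": "eu-west-1", "database": {"connection_string_ssm_arn": "primary-secret"}}
secondary = derive_secondary_config(primary, {
    "aws_region": "eu-west-3",
    "database.connection_string_ssm_arn": "secondary-secret",       # placeholder
    "database.connection_string_ssm_kms_arn": "secondary-kms-key",  # placeholder
    "load_balancer.certificate_arn": "secondary-certificate",       # placeholder
})
print(secondary["aws_region"], secondary["disable_services"])  # eu-west-3 True
print(primary["database"]["connection_string_ssm_arn"])         # primary-secret
```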

You also need to add a `disaster_recovery` section to the config file for your DR region:

- `disaster_recovery.encryption_primary_key_arn` is the Encryption primary key ARN output from installing Self-Hosted in your primary region.

- Make sure that `s3_bucket_replication.enabled` is set to `true`, set `replica_region` to the region that your primary instance is installed into, and populate the rest of the values using the S3 bucket replication info installer output.
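The `disaster_recovery` section can be assembled in the same way. This sketch only sets the keys named in this guide; because the guide does not list the key names printed under S3 bucket replication info, they are passed in as a dictionary and copied through unchanged. The function name and placeholder values are hypothetical.

```python
def build_disaster_recovery_section(
    encryption_primary_key_arn: str,
    primary_region: str,
    replication_info: dict,
) -> dict:
    """Assemble the DR region's `disaster_recovery` section.

    `replication_info` holds the remaining values from the primary installer's
    "S3 bucket replication info" output; they are copied through as-is.
    """
    s3_bucket_replication = dict(replication_info)  # copy so the input is untouched
    s3_bucket_replication["enabled"] = True
    s3_bucket_replication["replica_region"] = primary_region  # replicate back to the primary
    return {
        "encryption_primary_key_arn": encryption_primary_key_arn,
        "s3_bucket_replication": s3_bucket_replication,
    }


section = build_disaster_recovery_section(
    encryption_primary_key_arn="primary-key-arn-from-installer-output",  # placeholder
    primary_region="eu-west-1",
    replication_info={},  # fill in from the "S3 bucket replication info" output
)
print(section["s3_bucket_replication"])  # {'enabled': True, 'replica_region': 'eu-west-1'}
```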


Once you’re ready, run the installer just as you did for the primary region, but point it at the config file for your DR region instead.

Once the installer completes, it outputs some information that you’ll need to configure S3 replication in your primary instance.

The information you need is all under the S3 bucket replication info section.

Enabling S3 replication from primary to secondary

At this point, the last remaining thing to configure is S3 replication. This ensures that things like modules, run logs and state files are replicated from your primary region to your secondary region, so that they are available if you need to fail over.

To do this, edit the config file for your primary region and populate the `.disaster_recovery.s3_bucket_replication` section using the values under S3 bucket replication info in the output from your secondary region's installer.
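After editing both files, a quick consistency check can confirm that each region replicates to the other. This sketch assumes that the primary's `s3_bucket_replication` section uses the same `enabled` and `replica_region` keys described for the DR region, and that each file sets `aws_region`; `replication_is_mirrored` is a hypothetical helper.

```python
def replication_is_mirrored(primary: dict, secondary: dict) -> bool:
    """Return True if each region's S3 replication is enabled and targets the other region."""

    def replication(config: dict) -> dict:
        return config.get("disaster_recovery", {}).get("s3_bucket_replication", {})

    if not primary.get("aws_region") or not secondary.get("aws_region"):
        return False
    p, s = replication(primary), replication(secondary)
    return (
        p.get("enabled") is True
        and s.get("enabled") is True
        and p.get("replica_region") == secondary["aws_region"]
        and s.get("replica_region") == primary["aws_region"]
    )


primary = {"aws_region": "eu-west-1",
           "disaster_recovery": {"s3_bucket_replication": {"enabled": True, "replica_region": "eu-west-3"}}}
secondary = {"aws_region": "eu-west-3",
             "disaster_recovery": {"s3_bucket_replication": {"enabled": True, "replica_region": "eu-west-1"}}}
print(replication_is_mirrored(primary, secondary))  # True
```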

Once you’ve done this, run the installer again with your primary region's config file to complete your DR setup.

Migrating from an existing installation to one with DR

This section explains how to take an existing Self-Hosted installation and enable multi-region failover for disaster recovery purposes. For the most part, this follows the same process as configuring a DR installation from scratch, but some additional steps are required to make the database available in multiple regions and to replicate existing S3 objects.

Install the latest version of Self-Hosted

The first step is to install v2.0.0 of Self-Hosted. As part of the installation process any encrypted secret data in your database will be migrated from a single-region to a multi-region KMS key to allow for failover. To do this, just follow the standard installation process for any Self-Hosted version, but with the following caveats:

- We strongly advise that you take a snapshot of your RDS cluster before starting the installation. The KMS migration is designed so that if the conversion of any of your secrets fails, the entire process is rolled back, but we still recommend making sure you have an up-to-date snapshot before starting the process.

- The upgrade to v2.0.0 involves downtime. This is to ensure that no secrets are accidentally encrypted with the existing single-region key while the migration is taking place. The downtime should be relatively brief (less than 5 minutes).
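If your database is an RDS or Aurora cluster, the snapshot can also be taken with boto3 before you start the upgrade. This is a minimal sketch: it expects an already-configured RDS client for the cluster's region, and the snapshot naming scheme is an arbitrary choice for illustration.

```python
from datetime import datetime, timezone


def snapshot_before_upgrade(rds, cluster_id: str, wait: bool = True) -> str:
    """Create a manual snapshot of an RDS/Aurora cluster and return its identifier.

    `rds` is a boto3 RDS client. When `wait` is True, the function blocks
    until the snapshot is available.
    """
    # Timestamped name so repeated runs don't collide (the scheme is arbitrary).
    stamp = datetime.now(timezone.utc).strftime("%Y%m%d%H%M%S")
    snapshot_id = f"{cluster_id}-pre-v2-0-0-{stamp}"
    rds.create_db_cluster_snapshot(
        DBClusterSnapshotIdentifier=snapshot_id,
        DBClusterIdentifier=cluster_id,
    )
    if wait:
        rds.get_waiter("db_cluster_snapshot_available").wait(
            DBClusterSnapshotIdentifier=snapshot_id
        )
    return snapshot_id
```

For example: `snapshot_before_upgrade(boto3.client("rds", region_name="eu-west-1"), "your-cluster-id")`.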

When you have finished upgrading to the latest version of Self-Hosted, note the Encryption primary key ARN in the Installation info output from the script as well as the information in the S3 bucket replication info section. You will need this information when configuring your DR instance later in this guide.

Migrating the database

Next, migrate your database to a setup capable of being used in multiple regions, and update your Self-Hosted installation to use a custom connection string to access the database.

Info

We don't provide instructions on how to migrate your database, because the exact process depends on what you want to migrate to. As an example, however, you could use an Aurora Global database and convert your existing database cluster into a global cluster capable of failover.
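For the Aurora Global example, converting an existing Aurora cluster into the primary of a new global database is a single RDS API call. The sketch below shows only that step, assuming your Aurora engine version supports Aurora Global Database; adding the secondary cluster in eu-west-3 and switching Self-Hosted to a custom connection string are separate steps that are not shown. The identifiers are placeholders.

```python
def convert_to_global_cluster(rds, global_cluster_id: str, source_cluster_arn: str) -> dict:
    """Create an Aurora global database using an existing cluster as its primary.

    `rds` is a boto3 RDS client in the primary region; `source_cluster_arn`
    is the ARN of the existing Aurora cluster.
    """
    response = rds.create_global_cluster(
        GlobalClusterIdentifier=global_cluster_id,
        SourceDBClusterIdentifier=source_cluster_arn,
    )
    return response["GlobalCluster"]
```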

Configure your secondary instance and set up S3 replication

Follow the Deploying secondary region and Enabling S3 replication from primary to secondary sections of the installation guide to set up your secondary region and S3 replication.

Perform S3 batch replication

At this point, your disaster recovery setup is fully configured, but because S3 replication rules don’t replicate existing objects that were created before the rules were put in place, you need to run some one-time manual batch jobs to copy the existing objects.

The following S3 buckets have replication enabled, so you will need to run a batch copy job for each:

- `spacelift-states`: contains any Spacelift-managed state files.

- `spacelift-run-logs`: contains the logs shown for runs in the Spacelift UI.

- `spacelift-modules`: contains the code for any modules uploaded to your Terraform module registry.

- `policy-inputs`: contains any policy samples.

- `spacelift-workspaces`: used to store the temporary data used by in-progress Spacelift runs.
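The actual bucket names in your account may include a prefix or suffix around these identifiers. If you want to locate them programmatically, this sketch lists the buckets in your account and matches on the identifiers above. It assumes each real bucket name contains its identifier as a substring; the results include the buckets in both regions, so pick the ones in your primary region as the sources.

```python
REPLICATED_BUCKET_IDS = [
    "spacelift-states",
    "spacelift-run-logs",
    "spacelift-modules",
    "policy-inputs",
    "spacelift-workspaces",
]


def find_replicated_buckets(s3) -> dict:
    """Map each replicated bucket identifier to the bucket names that contain it.

    `s3` is a boto3 S3 client, for example boto3.client("s3").
    """
    names = [bucket["Name"] for bucket in s3.list_buckets()["Buckets"]]
    return {ident: [name for name in names if ident in name] for ident in REPLICATED_BUCKET_IDS}
```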

Creating a batch operations role

To perform the batch replication jobs, you need an IAM role that has permissions on both your source and destination buckets. You can restrict the role's policy to just the specific buckets you need to replicate.

Info

If you want batch operations to store a completion report for each job, which is useful if you need to investigate any failures, the role's policy must also allow access to a bucket for the reports (for example, a bucket called myorg-spacelift-replication-reports-bucket). You will need to create this bucket yourself and use its name in the policy.
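As a starting point, the following sketch generates a policy scoped to specific buckets, using the permissions that the AWS documentation lists for S3 Batch Replication jobs with a generated manifest: `s3:InitiateReplication` on the source objects, `s3:GetReplicationConfiguration` and `s3:PutInventoryConfiguration` on the source buckets, and `s3:PutObject` on the optional report bucket. It is narrower than a policy covering both source and destination buckets, bucket names are placeholders, and you should check it against the current AWS documentation before using it.

```python
import json
from typing import List, Optional


def batch_replication_policy(source_buckets: List[str], report_bucket: Optional[str] = None) -> dict:
    """Build an IAM policy for S3 Batch Replication jobs over the given source buckets."""
    statements = [
        {   # Start replication of existing objects in the source buckets.
            "Effect": "Allow",
            "Action": ["s3:InitiateReplication"],
            "Resource": [f"arn:aws:s3:::{b}/*" for b in source_buckets],
        },
        {   # Read the replication rules and generate the job manifest.
            "Effect": "Allow",
            "Action": ["s3:GetReplicationConfiguration", "s3:PutInventoryConfiguration"],
            "Resource": [f"arn:aws:s3:::{b}" for b in source_buckets],
        },
    ]
    if report_bucket:
        statements.append({   # Optional: write the job's completion report.
            "Effect": "Allow",
            "Action": ["s3:PutObject"],
            "Resource": [f"arn:aws:s3:::{report_bucket}/*"],
        })
    return {"Version": "2012-10-17", "Statement": statements}


policy = batch_replication_policy(
    ["myorg-spacelift-states"],  # placeholder bucket name
    report_bucket="myorg-spacelift-replication-reports-bucket",
)
print(json.dumps(policy, indent=2))
```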

Create a role with your policy attached, and configure the role’s trust relationship to allow S3 Batch Operations (the `batchoperations.s3.amazonaws.com` service principal) to assume the role.
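If you prefer to create the role with code rather than in the console, the sketch below uses boto3's IAM client to create a role that S3 Batch Operations can assume and to attach your permissions policy inline. The role name and inline policy name are hypothetical.

```python
import json

# Trust policy allowing the S3 Batch Operations service to assume the role.
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "batchoperations.s3.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def create_batch_role(iam, role_name: str, permissions_policy: dict) -> str:
    """Create the batch operations role, attach an inline policy, and return the role ARN.

    `iam` is a boto3 IAM client, for example boto3.client("iam").
    """
    role = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(TRUST_POLICY),
    )
    iam.put_role_policy(
        RoleName=role_name,
        PolicyName=f"{role_name}-permissions",
        PolicyDocument=json.dumps(permissions_policy),
    )
    return role["Role"]["Arn"]
```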

Running batch replication for a bucket

You will need to perform these steps for each bucket with replication enabled.

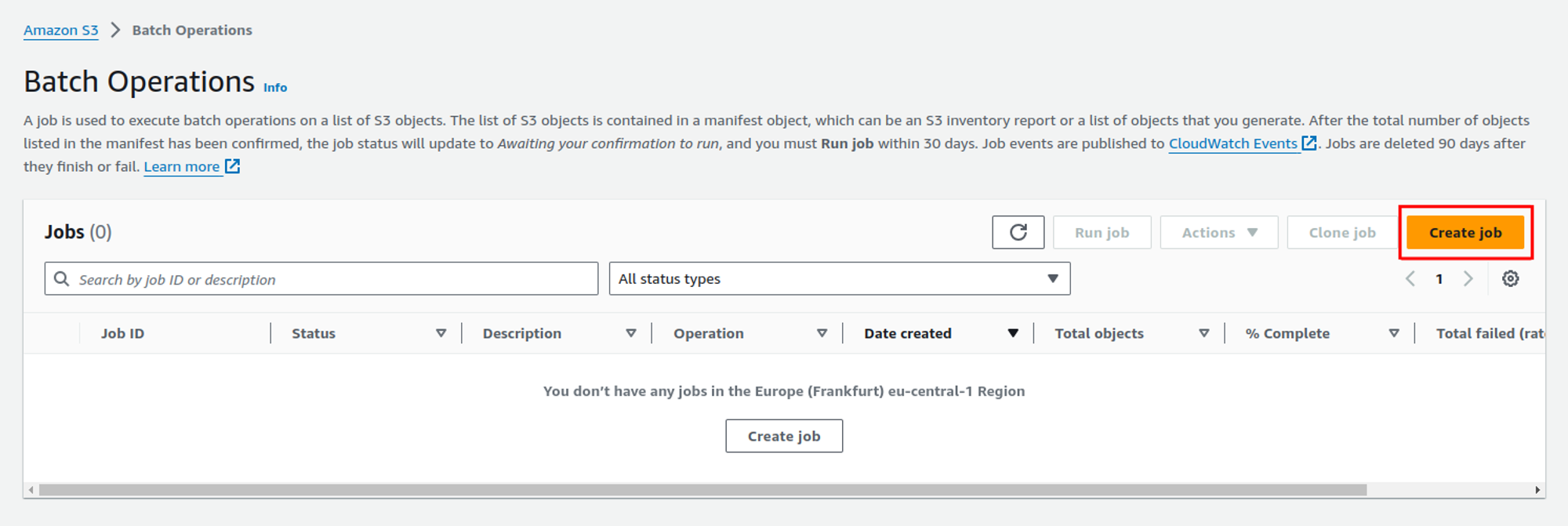

First, go to Amazon S3 → Batch Operations in your AWS console and click on Create job:

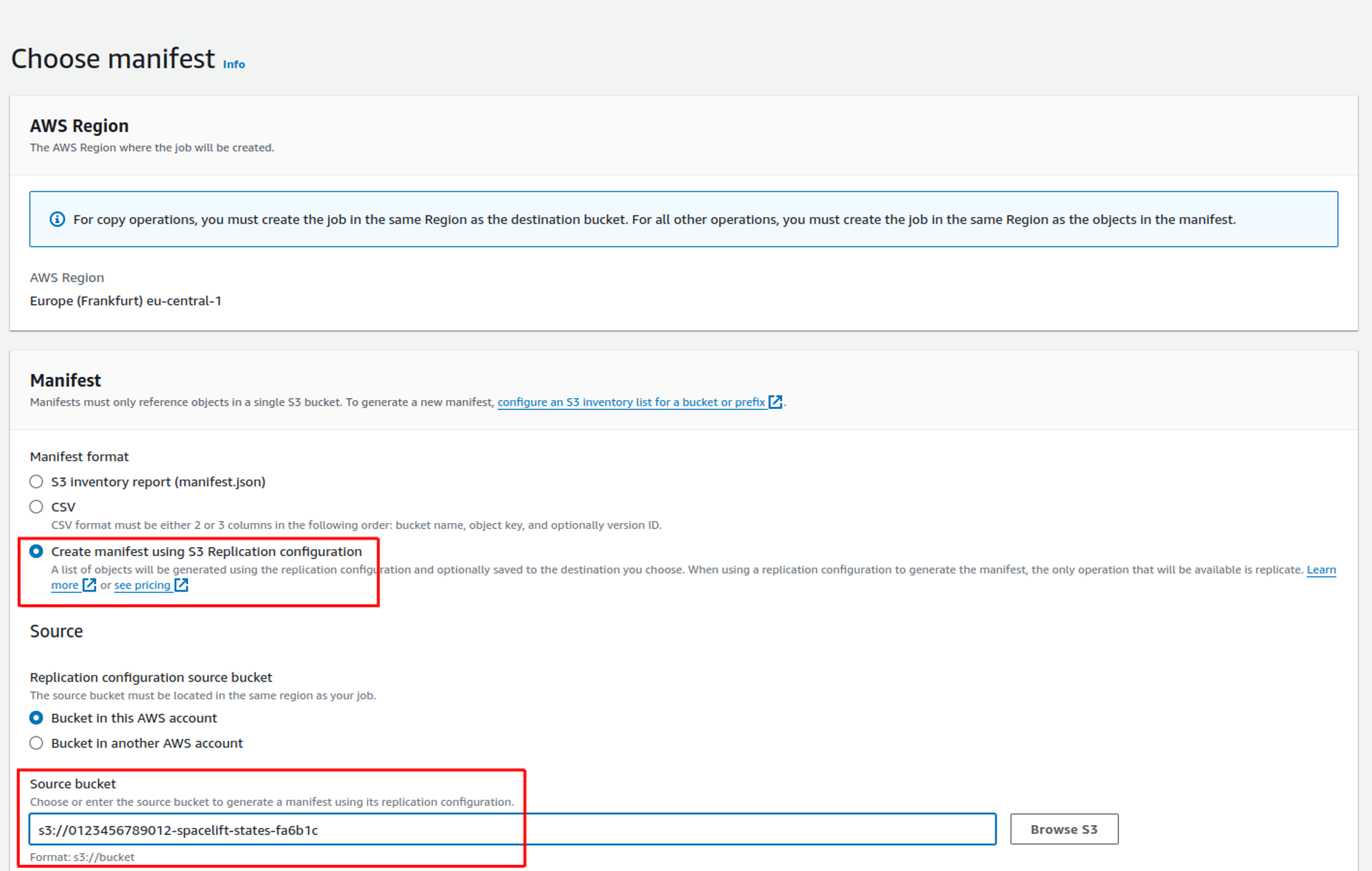

Choose manifest»

On the choose manifest screen, select the Create manifest using S3 Replication configuration option, and then choose the bucket you want to replicate:

Leave all the other options at their defaults, and click the Next button.

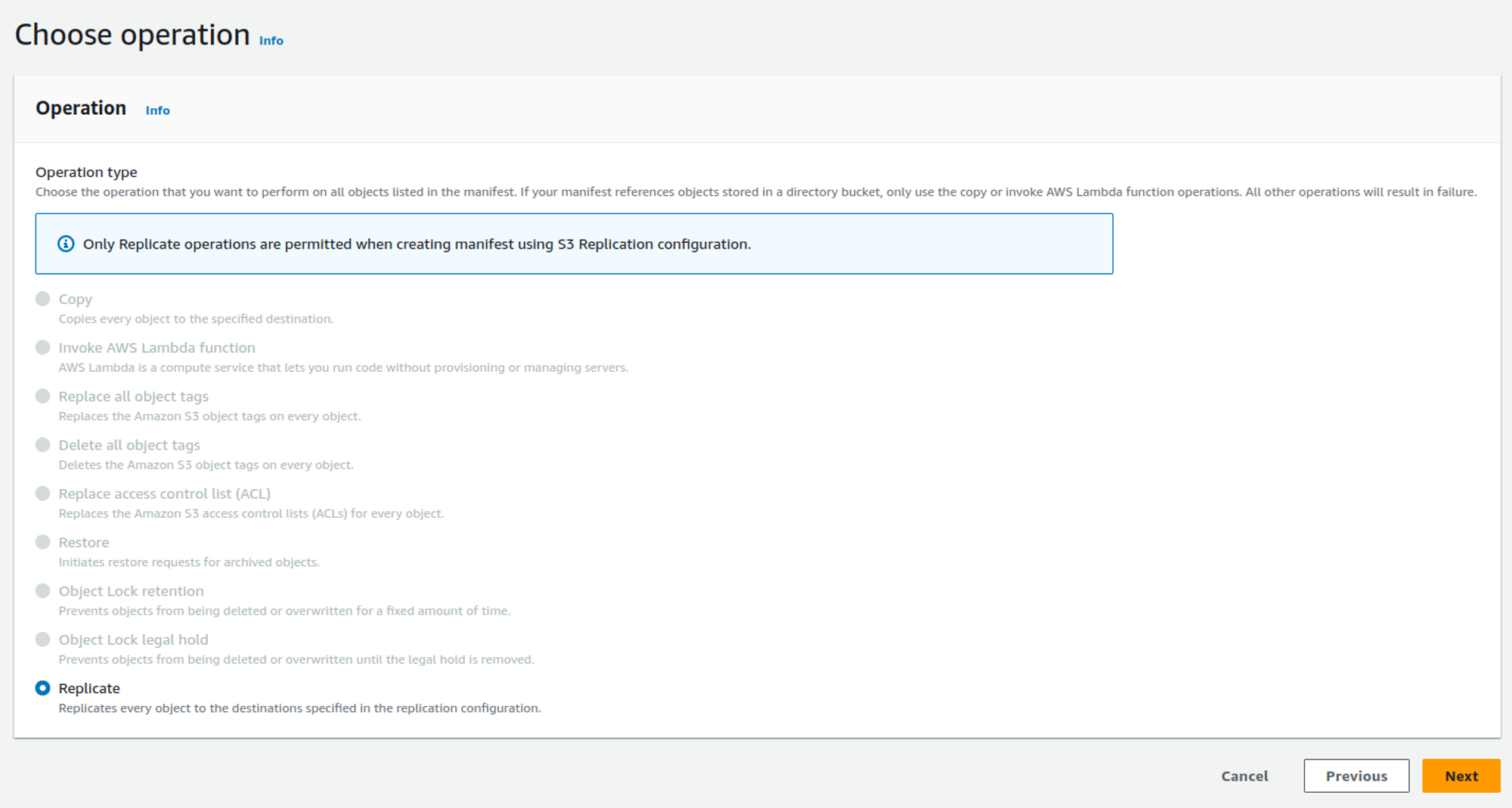

Choose operation»

The only option available on this page is Replicate. Choose it and click Next:

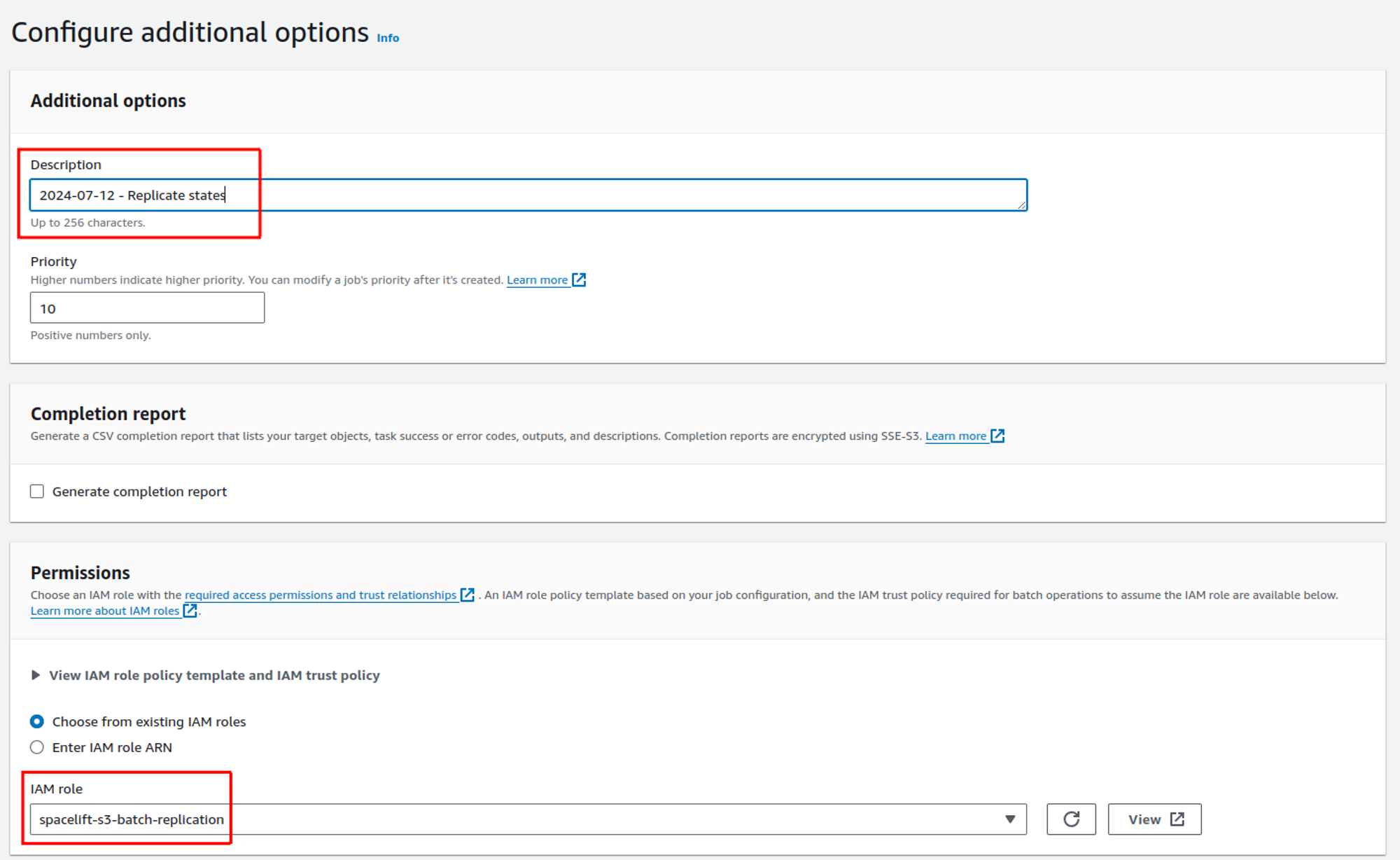

Configure additional options»

On this page, enter a description for your job, for example 2024-07-12 - Replicate states bucket.

You may also want to enable a completion report. This can be particularly useful in case any objects fail to replicate. If you want to do this, please see the AWS documentation for details on how to configure the correct IAM roles and a bucket for the report.

In the permissions section, choose the batch operations role you created earlier.

Click Next to review your job configuration.

Review

If everything looks good, click Create job to create the job.
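The console steps above can also be performed through the S3 Control API. This sketch creates an equivalent job for one bucket: it generates the manifest from the bucket's replication configuration, uses the Replicate operation, and leaves the job waiting for confirmation. The object filter (objects that have never been replicated or whose replication failed) is an assumption rather than a copy of the console's defaults, the completion report is disabled, and the client should be created in the source bucket's region, for example `boto3.client("s3control", region_name="eu-west-1")`.

```python
import uuid


def create_replication_job(s3control, account_id: str, source_bucket: str,
                           role_arn: str, description: str) -> str:
    """Create a Batch Replication job for existing objects and return its job ID."""
    response = s3control.create_job(
        AccountId=account_id,
        ConfirmationRequired=True,  # job waits for confirmation, as in the console
        Operation={"S3ReplicateObject": {}},
        Report={"Enabled": False},  # enable and add Bucket/Format/ReportScope for a report
        ClientRequestToken=str(uuid.uuid4()),
        Description=description,
        Priority=10,
        RoleArn=role_arn,
        ManifestGenerator={
            "S3JobManifestGenerator": {
                "SourceBucket": f"arn:aws:s3:::{source_bucket}",
                "EnableManifestOutput": False,
                "Filter": {
                    "EligibleForReplication": True,
                    # Assumed filter: never-replicated objects plus failed ones.
                    "ObjectReplicationStatuses": ["NONE", "FAILED"],
                },
            }
        },
    )
    return response["JobId"]
```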

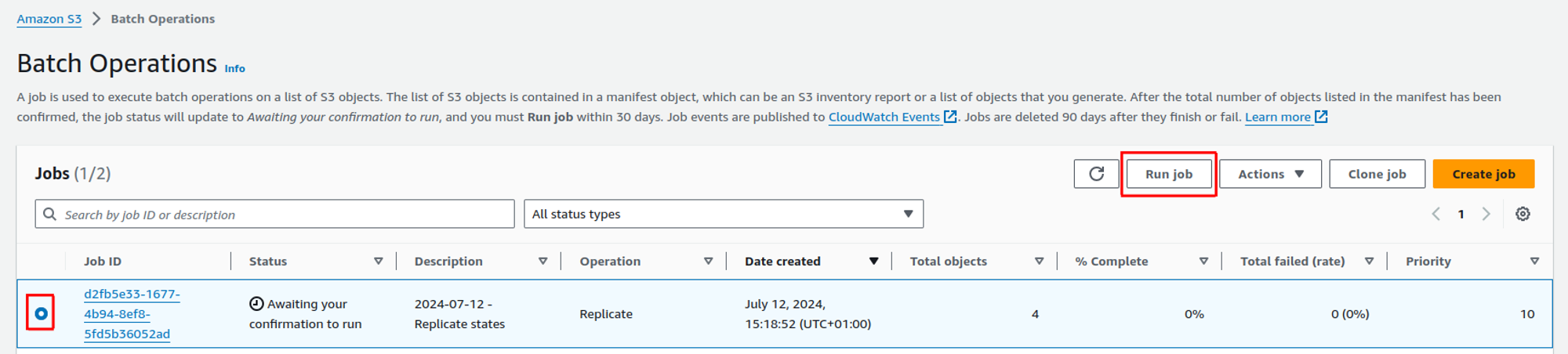

Run batch operation»

Once the job is in the Awaiting your confirmation to run status, select it in the list of jobs, and click on Run job:

On the next screen, review the job configuration, and click on the Run job button at the bottom of the screen.

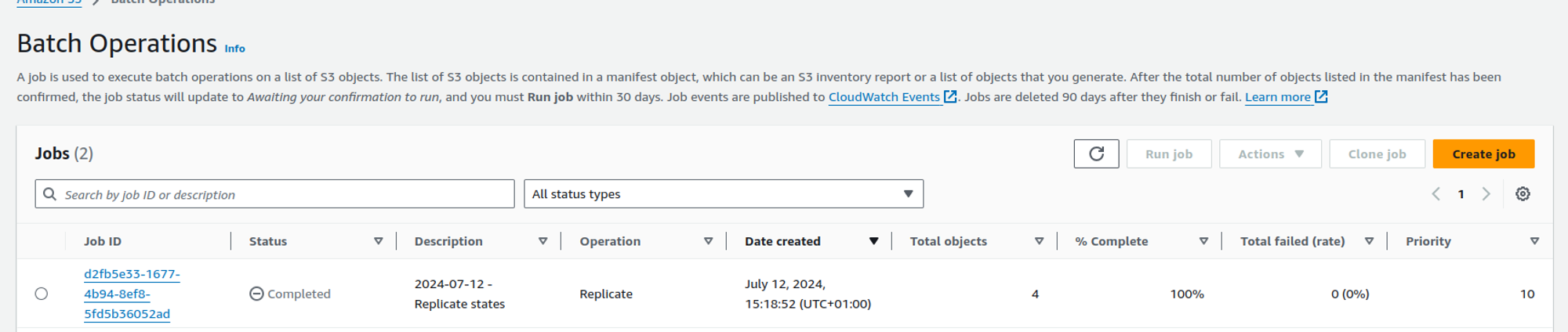

If all goes well, your job should end up in the Completed state once all the objects have successfully been replicated:
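The confirmation and wait can be scripted as well. This sketch moves a job that is awaiting confirmation to the Ready state and then polls until it reaches a terminal status. Note that the API reports `Complete` where the console shows Completed; the polling interval is arbitrary.

```python
import time

TERMINAL_STATUSES = {"Complete", "Failed", "Cancelled"}


def run_and_wait(s3control, account_id: str, job_id: str, poll_seconds: float = 30) -> str:
    """Confirm a Batch Operations job, then poll until it finishes; return the final status."""
    s3control.update_job_status(
        AccountId=account_id, JobId=job_id, RequestedJobStatus="Ready"
    )
    while True:
        status = s3control.describe_job(AccountId=account_id, JobId=job_id)["Job"]["Status"]
        if status in TERMINAL_STATUSES:
            return status
        time.sleep(poll_seconds)
```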

Failover runbook

The following two sections explain how to fail over to your secondary region and fail back to your primary region.

Failing over

To fail over to your secondary region, use the following steps:

- Make sure your database is available for your secondary region to use in read-write mode. For example, fail over your database to your secondary region so that the secondary DB connection is writable.

- Run the following command to start the services in your secondary region:

`./start-stop-services.sh -e true -c config.dr.json` (where `config.dr.json` contains the configuration for your secondary region).

Note: it may take a few minutes for the services to start fully.

- Update your server DNS to point at the load balancer for your secondary region.

- Update your IoT broker custom domain DNS to point at the IoT broker endpoint in your secondary region.

- Restart your workers to allow them to reconnect to the IoT broker in your secondary region. If using Kubernetes workers, restart the controller pod.

- Run the following command to stop the services in your primary region:

`./start-stop-services.sh -e false -c config.json` (where `config.json` contains the configuration for your primary region).

Note that while you could leave the services running in your primary region, they will begin to fail and go into a crash loop, because they will no longer be able to access the Spacelift database after it has been failed over to the secondary region.
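If your DNS is hosted in Amazon Route 53 and uses plain CNAME records, both DNS steps in the runbook (the server record and the IoT broker custom domain) can be updated with the same call. This sketch is an assumption about your DNS setup: alias records, or DNS hosted elsewhere, need a different approach. The hosted zone ID, record names and targets are placeholders.

```python
def point_record_at(route53, hosted_zone_id: str, record_name: str, target: str,
                    ttl: int = 60) -> str:
    """UPSERT a CNAME so `record_name` resolves to `target`; return the Route 53 change ID.

    `route53` is a boto3 Route 53 client, for example boto3.client("route53").
    """
    response = route53.change_resource_record_sets(
        HostedZoneId=hosted_zone_id,
        ChangeBatch={
            "Comment": f"Spacelift DR: point {record_name} at {target}",
            "Changes": [{
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "CNAME",
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": target}],
                },
            }],
        },
    )
    return response["ChangeInfo"]["Id"]
```

A short TTL like the one above reduces how long clients keep resolving the old region after the change.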

Warning

After failing over to the secondary region, you will need to clear your cookies before you can log in to Spacelift.

Failing back

To fail back to your primary region, use the following steps:

- Make sure your database is available for your primary region to use in read-write mode. For example, fail your database back over to your primary region so that the primary DB connection is writable.

- Run the following command to start the services in your primary region:

`./start-stop-services.sh -e true -c config.json` (where `config.json` contains the configuration for your primary region).

- Update your DNS to point at the load balancer for your primary region.

- Update your IoT broker custom domain DNS to point at the IoT broker endpoint in your primary region.

- Restart your workers to allow them to reconnect to the IoT broker in your primary region. If using Kubernetes workers, restart the controller pod.

- Run the following command to stop the services in your secondary region:

`./start-stop-services.sh -e false -c config.dr.json` (where `config.dr.json` contains the configuration for your secondary region).

Note that while you could leave the services running in your secondary region, they will begin to fail and go into a crash loop, because they will no longer be able to access the Spacelift database after it has been failed back to the primary region.
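The start and stop commands follow the same order in both directions: bring up the target region first, then stop the old one once DNS and workers have moved. A hypothetical wrapper like the following keeps that order and pauses for the manual steps in between. It assumes you run it from the directory containing `start-stop-services.sh`, and it does not handle the database, DNS or worker steps itself.

```python
import subprocess


def switch_region(start_config: str, stop_config: str, run=subprocess.run, confirm=input) -> None:
    """Start services for `start_config`, wait for the manual steps, then stop `stop_config`."""
    # Bring up the region you are moving to first.
    run(["./start-stop-services.sh", "-e", "true", "-c", start_config], check=True)
    # DNS updates and worker restarts are manual steps in the runbook.
    confirm("Update DNS and restart workers, then press Enter to stop the old region: ")
    # Only then stop the region you are moving away from.
    run(["./start-stop-services.sh", "-e", "false", "-c", stop_config], check=True)
```

To fail back, call `switch_region("config.json", "config.dr.json")`; to fail over, swap the two arguments.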

Considerations after failing over

- If you need to run the `install.sh` script against your secondary instance after failing over, make sure to set the `disable_services` property in its config file to `false`. Otherwise, running the installer will stop your Spacelift services.

- If you plan on permanently switching to the new region, you should also change the `is_dr_instance` property to `false` (and set it to `true` in your other region). This is important so that things like database migrations run successfully when new versions are installed.
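Both adjustments can be applied to the parsed config files with a few lines of Python. This sketch assumes `is_dr_instance` lives under the `disaster_recovery` section; the guide does not say where it is, so the path is a parameter. The function names are hypothetical.

```python
def set_path(config: dict, path: tuple, value) -> None:
    """Set a nested key, creating intermediate dicts as needed."""
    node = config
    for key in path[:-1]:
        node = node.setdefault(key, {})
    node[path[-1]] = value


def prepare_after_failover(active: dict, other: dict, permanent: bool = False,
                           is_dr_path: tuple = ("disaster_recovery", "is_dr_instance")) -> None:
    """Adjust configs in place after failing over to the region described by `active`."""
    # Needed whenever you re-run install.sh against the now-active region.
    set_path(active, ("disable_services",), False)
    if permanent:
        # Swap which region is treated as the DR instance.
        set_path(active, is_dr_path, False)
        set_path(other, is_dr_path, True)
```

Load each config file with `json.load`, call the function, and write the files back before running the installer.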